Next Generation Multicast – NG-MVPN

Introduction

Multicast is a great technology that provides great benefits, yet it is seldom deployed. It’s a lot like IPv6 in that regard. Service providers and enterprises that run MPLS and want to offer multicast services have historically not been able to use MPLS to carry the multicast traffic. Multicast has instead typically been delivered using Draft Rosen, which relies on multipoint GRE (mGRE). This post starts with a brief overview of Draft Rosen.

Draft Rosen

Draft Rosen uses GRE as an overlay protocol, which means that all multicast packets are encapsulated inside GRE. A virtual LAN is emulated by having all PE routers in the VPN join a multicast group. This is known as the default Multicast Distribution Tree (MDT). The default MDT is used for PIM hellos and other PIM signaling but also for data traffic. If the source sends a lot of traffic it is inefficient to use the default MDT, and a data MDT can be created. The data MDT will only include PEs that have receivers for the group in use.

Draft Rosen is fairly simple to deploy and works well but it has a few drawbacks. Let’s take a look at these:

- Overhead – GRE adds 24 bytes of overhead to the packet. Compared to MPLS, which typically adds 8 or 12 bytes, that is 100% or more additional overhead on each packet

- PIM in the core – Draft Rosen requires that PIM is enabled in the core because the PEs must join the default and/or data MDT, which is done through PIM signaling. If PIM ASM is used in the core, an RP is needed as well. If PIM SSM is run in the core, no RP is needed.

- Core state – Unnecessary state is created in the core due to the PIM signaling from the PEs. The core should have as little state as possible

- PIM adjacencies – The PEs will become PIM neighbors with each other. In a large VPN with a lot of PEs, a lot of PIM adjacencies will be created. This will generate a lot of hellos and other signaling, which adds to the burden on the routers

- Unicast vs multicast – Unicast forwarding uses MPLS, multicast uses GRE. This adds complexity and means that unicast is using a different forwarding mechanism than multicast, which is not the optimal solution

- Inefficiency – The default MDT sends traffic to all PEs in the VPN regardless of whether the PE has a receiver in the (*,G) or (S,G) for the group in use
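To put rough numbers on the overhead and adjacency points in the list above, here is a small back-of-the-envelope sketch in Python. The function names are made up for illustration, and it assumes that the 8 and 12 bytes of MPLS overhead correspond to two and three 4-byte labels and that every PE peers with every other PE over the default MDT.

```python
# Back-of-the-envelope numbers only; function names are illustrative.
GRE_OVERHEAD = 24   # outer IPv4 header (20 bytes) + basic GRE header (4 bytes)
MPLS_LABEL = 4      # one MPLS label stack entry is 4 bytes


def extra_overhead_pct(gre_bytes: int, mpls_bytes: int) -> float:
    """How much more overhead GRE adds compared to MPLS, in percent."""
    return (gre_bytes - mpls_bytes) / mpls_bytes * 100


def full_mesh_adjacencies(pe_count: int) -> int:
    """PIM adjacencies in one VPN if every PE peers with every other PE."""
    return pe_count * (pe_count - 1) // 2


for labels in (2, 3):
    mpls = labels * MPLS_LABEL
    pct = extra_overhead_pct(GRE_OVERHEAD, mpls)
    print(f"{labels} labels ({mpls} bytes): GRE adds {pct:.0f}% more overhead")

print("adjacencies in a VPN with 100 PEs:", full_mesh_adjacencies(100))
```

With three labels GRE carries 100% more overhead and with two labels 200% more, which is where the "100% or more" figure comes from.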

Based on this list, it is clear that there is room for improvement. The things we are looking to achieve with another solution are:

- Shared control plane with unicast

- Fewer protocols to manage in the core

- Shared forwarding plane with unicast

- Only use MPLS as encapsulation

- Fast Restoration (FRR)

NG-MVPN

To be able to build multicast Label Switched Paths (LSPs) we need to provide these labels in some way. There are three main options to provide these labels today:

- Multipoint LDP (mLDP)

- RSVP-TE P2MP

- Unicast MPLS + Ingress Replication (IR)

mLDP is an extension to the familiar Label Distribution Protocol (LDP). It supports both P2MP and MP2MP LSPs and is defined in RFC 6388.

RSVP-TE P2MP is an extension to the unicast RSVP-TE that some providers use today, instead of LDP, to build LSPs. It is defined in RFC 4875.

Unicast MPLS with ingress replication reuses the existing unicast LSPs and needs no additional signaling in the core. It does not use a multipoint LSP. Instead, the ingress PE sends a separate copy of each packet to every egress PE.
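The cost of not using a multipoint LSP is easiest to see by counting packets. The sketch below is a simplified model, not a description of any implementation: the function name is hypothetical, and it assumes the ingress PE has a single downstream branch when a multipoint LSP is used.

```python
def copies_sent_by_ingress(egress_pes: int, ingress_replication: bool) -> int:
    """Copies of each multicast packet that the ingress PE has to transmit."""
    if ingress_replication:
        # One copy per egress PE, each sent over its own unicast LSP.
        return egress_pes
    # With a multipoint LSP the core replicates further downstream
    # (assumption: only one downstream branch at the ingress PE).
    return 1


for pes in (5, 50):
    print(pes, "egress PEs:",
          copies_sent_by_ingress(pes, True), "copies with IR,",
          copies_sent_by_ingress(pes, False), "with a multipoint LSP")
```

Ingress replication trades core state and signaling for bandwidth on the links closest to the ingress PE, so it tends to fit VPNs with few egress PEs or low multicast rates.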

Multipoint LSP

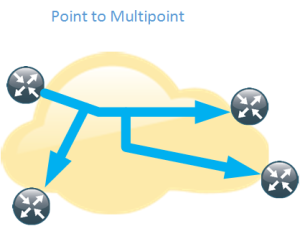

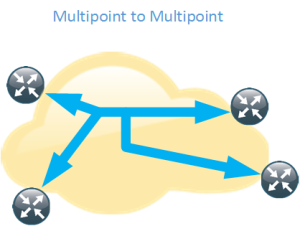

Normal unicast forwarding through MPLS uses a point to point LSP. This is not efficient for multicast. To overcome this, multipoint LSPs are used instead. There are two different types, point to multipoint and multipoint to multipoint.

Point to multipoint (P2MP):

- Replication of traffic in core

- Allows only the root of the P2MP LSP to inject packets into the tree

- If signaled with mLDP – Path based on IP routing

- If signaled with RSVP-TE – Constraint-based/explicit routing. RSVP-TE also supports admission control

Multipoint to multipoint (MP2MP):

- Replication of traffic in core

- Bidirectional

- All the leaves of the LSP can inject and receive packets from the LSP

- Signaled with mLDP

- Path based on IP routing

Core Tree Types

Depending on the number of sources and where the sources are located, different types of core trees can be used. If you are familiar with Draft Rosen, you may know of the default MDT and the data MDT.

Signalling the Labels

As mentioned previously there are three main ways of signalling the labels. We will start by looking at mLDP.

- LSPs are built from the leaf to the root

- Supports P2MP and MP2MP LSPs

- mLDP with MP2MP provides great scalability advantages for “any to any” topologies

- “any to any” communication applications:

- mVPN supporting bidirectional PIM

- mVPN Default MDT model

- If a provider does not want tree state per ingress PE source

- Supports Fast Reroute (FRR) via RSVP-TE unicast backup path

- No periodic signaling, reliable using TCP

- Control plane is P2MP or MP2MP

- Data plane is P2MP

- Scalable due to receiver driven tree building

- Supports MP2MP

- Does not support traffic engineering
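The "built from the leaf to the root" behaviour can be sketched in a few lines of Python. This is a toy model under stated assumptions: the router names and the `next_hop_to_root` table are invented, and real mLDP exchanges label mappings per FEC rather than tuples. What it shows is that each leaf follows the unicast route towards the root and stops as soon as it reaches a router that is already on the tree.

```python
def build_p2mp_tree(root, leaves, next_hop_to_root):
    """Return the (upstream, downstream) branches created by leaf-driven joins."""
    branches = set()
    for leaf in leaves:
        node = leaf
        while node != root:
            upstream = next_hop_to_root[node]   # path follows IP routing
            if (upstream, node) in branches:
                break   # already on the tree, no new state needed upstream
            branches.add((upstream, node))
            node = upstream
    return branches


# Hypothetical topology: PE1 is the root, P1 and P2 are core routers.
next_hop_to_root = {"PE2": "P2", "PE3": "P2", "PE4": "P1", "P2": "P1", "P1": "PE1"}
tree = build_p2mp_tree("PE1", ["PE2", "PE3", "PE4"], next_hop_to_root)
for upstream, downstream in sorted(tree):
    print(f"{upstream} -> {downstream}")
```

Three leaves create only five branches here because PE3 and PE4 merge into state that PE2 already built, which is the receiver-driven scaling property listed above.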

RSVP-TE can be used as well with the following characteristics.

- LSPs are built from the head-end to the tail-end

- Supports only P2MP LSPs

- Supports traffic engineering

- Bandwidth reservation

- Explicit routing

- Fast Reroute (FRR)

- Signaling is periodic

- P2P technology at control plane

- Inherits P2P scaling limitations

- P2MP at the data plane

- Packet replication in the core

RSVP-TE will mostly be interesting for SPs that are already running RSVP-TE for unicast or for SPs involved in video delivery. The following table shows a comparison of the different protocols.

Assigning Flows to LSPs

After the LSPs have been signalled, we need to get traffic onto the LSPs. This can be done in several different ways.

- Static

- PIM (RFC 6513)

- BGP Customer Multicast (C-Mcast) (RFC 6514, which also describes Auto-Discovery)

- mLDP inband signaling (RFC 6826)

Static

- Mostly applicable to RSVP-TE P2MP

- Static configuration of multicast flows per LSP

- Allows aggregation of multiple flows in a single LSP

PIM

- Dynamically assigns flows to an LSP by running PIM over the LSP

- Works over MP2MP and PPMP LSP types

- Mostly used with, but not limited to, the default MDT

- No changes needed to PIM

- Allows aggregation of multiple flows in a single LSP

BGP Auto-Discovery

- Auto-Discovery

- The process of discovering all the PEs with members in a given mVPN

- Used to establish the MDT in the SP core

- Can also be used to discover the set of PEs interested in a given customer multicast group (to enable S-PMSI creation)

- S-PMSI = Data MDT

- Used to advertise address of the originating PE and tunnel attribute information (which kind of tunnel)

BGP MVPN Address Family

- MP-BGP extensions to support mVPN address family

- Used for advertisement of AD routes

- Used for advertisement of C-mcast routes (*,G) and (S,G)

- Two new extended communities

- VRF route import – Used to import mcast routes, similar to RT for unicast routes

- Source AS – Used for inter-AS mVPN

- New BGP attributes

- PMSI Tunnel Attribute (PTA) – Contains information about advertised tunnel

- PPMP label attribute – Upstream generated label used by the downstream clients to send unicast messages towards the source

- If the mVPN address family is not used, the address family ipv4 mdt must be used

BGP Customer Multicast

- BGP Customer Multicast (C-mcast) signalling on overlay

- Tail-end driven updates are not a natural fit for BGP

- BGP is more suited for one-to-many not many-to-one

- PIM is still the PE-CE protocol

- Easy to use with SSM

- Complex to understand and troubleshoot for ASM

MLDP Inband Signaling

- Multicast flow information encoded in the mLDP FEC

- Each customer mcast flow creates state on the core routers

- Scaling is the same as with default MDT with every C-(S,G) on a Data MDT

- IPv4 and IPv6 multicast in global or VPN context

- Typical for SSM or PIM sparse mode sources

- IPTV walled garden deployment

- RFC 6826

The natural choice is to stick with PIM unless you need very high scalability. Here is a comparison of PIM and BGP.

BGP C-Signaling

- With C-PIM signaling on default MDT models, data needs to be monitored

- On default/data tree to detect duplicate forwarders over MDT and to trigger the assert process

- On default MDT to perform SPT switchover (from (*,G) to (S,G))

- On default MDT models with C-BGP signaling

- There is only one forwarder on MDT

- There are no asserts

- The BGP type 5 routes are used for SPT switchover on PEs

- Type 4 leaf AD route used to track type 3 S-PMSI (Data MDT) routes

- Needed when RR is deployed

- If the source PE sets the leaf-info-required flag on type 3 routes, the receiver PE responds with a type 4 route
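The route type numbers used in the list above come from the BGP MVPN address family in RFC 6514. As a quick reference, the sketch below lists them as a Python enum. The class and function names are made up for illustration, and the helper only models the S-PMSI case described above.

```python
from enum import IntEnum


class MvpnRouteType(IntEnum):
    """MCAST-VPN route types as numbered in RFC 6514."""
    INTRA_AS_I_PMSI_AD = 1
    INTER_AS_I_PMSI_AD = 2
    S_PMSI_AD = 3            # Data MDT in Draft Rosen terms
    LEAF_AD = 4
    SOURCE_ACTIVE_AD = 5     # used for SPT switchover
    SHARED_TREE_JOIN = 6     # C-multicast (*,G)
    SOURCE_TREE_JOIN = 7     # C-multicast (S,G)


def leaf_ad_expected(route_type: MvpnRouteType, leaf_info_required: bool) -> bool:
    """Simplified: a receiver PE answers a type 3 route with a type 4 route
    only when the source PE set the leaf-info-required flag."""
    return route_type == MvpnRouteType.S_PMSI_AD and leaf_info_required


print(MvpnRouteType(5).name)
print(leaf_ad_expected(MvpnRouteType.S_PMSI_AD, True))
```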

Migration

If PIM is used in the core, this can be migrated to mLDP. PIM can also be migrated to BGP. This can be done per multicast source, per multicast group and per source ingress router. This means that migration can be done gradually so that not all core trees must be replaced at the same time.

It is also possible to have both mGRE and MPLS encapsulation in the network for different PEs.

To summarize the different options for assigning flows to LSPs:

- Static: mostly applicable to RSVP-TE

- PIM: well known, has been in use since mVPN was introduced over GRE

- BGP A-D: useful where the head-end assigns the flows to the LSP

- BGP C-mcast: alternative to PIM in mVPN context, may be required in dual vendor networks

- MLDP inband signaling: method to stitch a PIM tree to an mLDP LSP without any additional signaling

Optimizing the MDT

There are some drawbacks with the normal operation of the MDT. The tree is signalled even if there is no customer traffic, leading to unnecessary state in the core. To overcome these limitations there is a model called the partitioned MDT, running over mLDP, with the following characteristics.

- Dynamic version of default MDT model

- MDT is only built when customer traffic needs to be transported across the core

- It addresses issues with the default MDT model

- Optimizes deployments where sources are located in a few sites

- Supports anycast sources

- Default MDT would use PIM asserts

- Reduces the number of PIM neighbors

- PIM neighborship is unidirectional – The egress PE sees ingress PEs as PIM neighbors

Conclusion

There are many different profiles supported, currently 27 on Cisco equipment. Here are some guidelines to help you select a profile for NG-MVPN.

- Label Switched Multicast (LSM) provides unified unicast and multicast forwarding

- Choosing a profile depends on the application and scalability/feature requirements

- MLDP is the natural and safe choice for general purpose

- Inband signalling is for walled garden deployments

- Partitioned MDT is most suitable if there are few sources/few sites

- P2MP TE is used for bandwidth reservation and video distribution (few source sites)

- Default MDT model is for anyone (else)

- PIM is still used as the PE-CE protocol towards the customer

- PIM or BGP can be used as an overlay protocol unless inband signaling or static mapping is used

- BGP is the natural choice for high scalability deployments

- BGP may be the natural choice if already using it for Auto-Discovery

- The beauty of NG-MVPN is that the profile can be selected per customer/VPN

- Even per source, per group or per next-hop can be done with Routing Policy Language (RPL)
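One rough way of reading the core tree guidelines is as a small decision helper. This is only a sketch: the function name, the parameters and especially the order of the checks are assumptions made for illustration, not something taken from the Cisco Live session, and a real design would weigh more factors.

```python
def suggest_core_tree(walled_garden: bool = False,
                      needs_bandwidth_reservation: bool = False,
                      few_source_sites: bool = False) -> str:
    """Map coarse requirements to one of the core tree models listed above."""
    if walled_garden:
        return "mLDP inband signalling"
    if needs_bandwidth_reservation:
        return "P2MP TE"
    if few_source_sites:
        return "partitioned MDT"
    return "default MDT"   # the model "for anyone (else)"


print(suggest_core_tree(few_source_sites=True))
print(suggest_core_tree())
```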

This post was heavily inspired by, and is basically a summary of, the Cisco Live session BRKIPM-3017 mVPN Deployment Models by Ijsbrand Wijnands and Luc De Ghein. I recommend that you read it for more details and configuration of NG-MVPN.

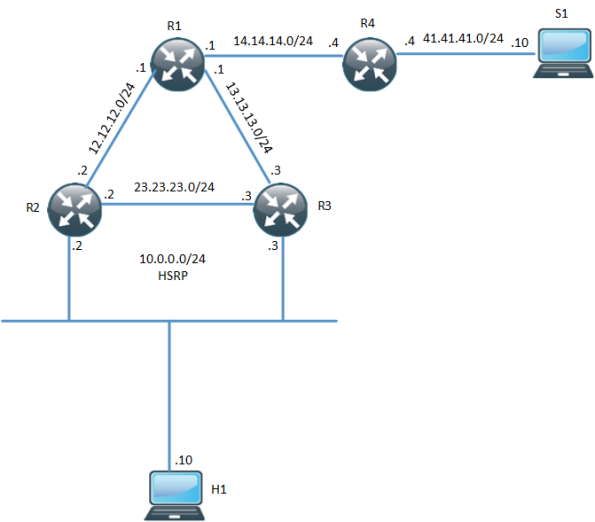

HSRP AWARE PIM

In environments that require redundancy towards clients, HSRP will normally be running. HSRP is a proven protocol and it works, but how do we handle clients that need multicast? What triggers multicast to converge when the Active Router (AR) goes down? The following topology is used:

One thing to notice here is that R3 is the PIM DR even though R2 is the HSRP AR. The network has been set up with OSPF and PIM, and R1 is the RP. Both R2 and R3 will receive IGMP reports but only R3 will send a PIM Join, because it is the PIM DR. R3 builds the (*,G) towards the RP:

R3#sh ip mroute 239.0.0.1

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.0.0.1), 02:54:15/00:02:20, RP 1.1.1.1, flags: SJC

Incoming interface: Ethernet0/0, RPF nbr 13.13.13.1

Outgoing interface list:

Ethernet0/2, Forward/Sparse, 00:25:59/00:02:20

We then ping 239.0.0.1 from the multicast source to build the (S,G):

S1#ping 239.0.0.1 re 3

Type escape sequence to abort.

Sending 3, 100-byte ICMP Echos to 239.0.0.1, timeout is 2 seconds:

Reply to request 0 from 10.0.0.10, 35 ms

Reply to request 1 from 10.0.0.10, 1 ms

Reply to request 2 from 10.0.0.10, 2 ms

The (S,G) has been built:

R3#sh ip mroute 239.0.0.1

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.0.0.1), 02:57:14/stopped, RP 1.1.1.1, flags: SJC

Incoming interface: Ethernet0/0, RPF nbr 13.13.13.1

Outgoing interface list:

Ethernet0/2, Forward/Sparse, 00:28:58/00:02:50

(41.41.41.10, 239.0.0.1), 00:02:03/00:00:56, flags: JT

Incoming interface: Ethernet0/0, RPF nbr 13.13.13.1

Outgoing interface list:

Ethernet0/2, Forward/Sparse, 00:02:03/00:02:50

The unicast and multicast topologies are currently not congruent, which may or may not be important. What happens when R3 fails?

R3(config)#int e0/2

R3(config-if)#sh

R3(config-if)#

No replies to the pings come in until PIM on R2 detects that R3 is gone and takes over the DR role. This takes between 60 and 90 seconds with the default timers in use.

S1#ping 239.0.0.1 re 100 ti 1

Type escape sequence to abort.

Sending 100, 100-byte ICMP Echos to 239.0.0.1, timeout is 1 seconds:

Reply to request 0 from 10.0.0.10, 18 ms

Reply to request 1 from 10.0.0.10, 2 ms....................................................................

.......

Reply to request 77 from 10.0.0.10, 10 ms

Reply to request 78 from 10.0.0.10, 1 ms

Reply to request 79 from 10.0.0.10, 1 ms

Reply to request 80 from 10.0.0.10, 1 ms
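The length of this outage follows from the PIM hello timers. The sketch below is a simplified model with a made-up function name: it assumes the failure can happen at any point within a hello interval and that the neighbor is only declared dead when its holdtime expires. A multiplier of 3 reproduces the 60 to 90 second range. RFC 7761 specifies a default holdtime of 3.5 times the hello interval (105 seconds), so the exact window depends on the platform's timers.

```python
def dr_failover_window(hello_interval=30.0, holdtime_multiplier=3.0):
    """Return (shortest, longest) time in seconds before a dead DR is noticed.

    The last hello arrived between 0 and hello_interval seconds before the
    failure, and the neighbor expires one holdtime after that last hello.
    """
    holdtime = hello_interval * holdtime_multiplier
    return holdtime - hello_interval, holdtime


print(dr_failover_window())          # 3 x hello interval
print(dr_failover_window(30, 3.5))   # RFC 7761 default holdtime of 105 s
```

Either way, the roughly 75 lost pings in the output above fall inside the predicted window.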

We can increase the DR priority on R2 to make it become the DR.

R2(config-if)#ip pim dr-priority 50

*Feb 13 12:42:45.900: %PIM-5-DRCHG: DR change from neighbor 10.0.0.3 to 10.0.0.2 on interface Ethernet0/2
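The DR election itself is simple enough to sketch: the highest DR priority wins and the highest IP address breaks ties, with a default priority of 1. The function below is an illustrative model, not router code, and it assumes every router on the segment advertises a DR priority.

```python
import ipaddress


def elect_dr(routers):
    """routers maps interface address -> DR priority.

    Highest priority wins; the highest IP address breaks a tie.
    """
    return max(routers,
               key=lambda addr: (routers[addr], int(ipaddress.ip_address(addr))))


# Default priorities: R3 wins on the higher address.
print(elect_dr({"10.0.0.2": 1, "10.0.0.3": 1}))    # 10.0.0.3
# After "ip pim dr-priority 50" on R2.
print(elect_dr({"10.0.0.2": 50, "10.0.0.3": 1}))   # 10.0.0.2
```

This is why R3 ended up as the DR in the first place, even though R2 was the HSRP AR.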

HSRP aware PIM is a feature that started appearing in IOS 15.3(1)T and makes the HSRP AR become the PIM DR. It will also send PIM messages from the virtual IP, which is useful in situations where you have a router with a static route towards a Virtual IP (VIP). This is how Cisco describes the feature:

HSRP Aware PIM enables multicast traffic to be forwarded through the HSRP active router (AR), allowing PIM to leverage HSRP redundancy, avoid potential duplicate traffic, and enable failover, depending on the HSRP states in the device. The PIM designated router (DR) runs on the same gateway as the HSRP AR and maintains mroute states.

In my topology, I am running HSRP towards the clients, so even though this feature sounds like a perfect fit, it will not help me in converging my multicast. Let’s configure this feature on R2:

R2(config-if)#ip pim redundancy HSRP1 hsrp dr-priority 100

R2(config-if)#

*Feb 13 12:48:20.024: %PIM-5-DRCHG: DR change from neighbor 10.0.0.3 to 10.0.0.2 on interface Ethernet0/2

R2 is now the PIM DR, and R3 now sees two PIM neighbors on interface E0/2:

R3#sh ip pim nei e0/2

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

P - Proxy Capable, S - State Refresh Capable, G - GenID Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

10.0.0.1 Ethernet0/2 00:00:51/00:01:23 v2 0 / S P G

10.0.0.2 Ethernet0/2 00:07:24/00:01:23 v2 100/ DR S P G

R2 now has the (S,G) and we can see that it was the Assert winner because R3 was previously sending multicasts to the LAN segment.

R2#sh ip mroute 239.0.0.1

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.0.0.1), 00:20:31/stopped, RP 1.1.1.1, flags: SJC

Incoming interface: Ethernet0/0, RPF nbr 12.12.12.1

Outgoing interface list:

Ethernet0/2, Forward/Sparse, 00:16:21/00:02:35

(41.41.41.10, 239.0.0.1), 00:00:19/00:02:40, flags: JT

Incoming interface: Ethernet0/0, RPF nbr 12.12.12.1

Outgoing interface list:

Ethernet0/2, Forward/Sparse, 00:00:19/00:02:40, A

What happens when R2’s LAN interface goes down? Will R3 become the DR? And how fast will it converge?

R2(config)#int e0/2

R2(config-if)#sh

HSRP changes to active on R3, but the PIM DR role does not converge until the PIM neighbor has timed out (roughly three missed hellos).

*Feb 13 12:51:44.204: HSRP: Et0/2 Grp 1 Redundancy "hsrp-Et0/2-1" state Standby -> Active

R3#sh ip pim nei e0/2

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

P - Proxy Capable, S - State Refresh Capable, G - GenID Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

10.0.0.1 Ethernet0/2 00:04:05/00:00:36 v2 0 / S P G

10.0.0.2 Ethernet0/2 00:10:39/00:00:36 v2 100/ DR S P G

R3#

*Feb 13 12:53:02.013: %PIM-5-NBRCHG: neighbor 10.0.0.2 DOWN on interface Ethernet0/2 DR

*Feb 13 12:53:02.013: %PIM-5-DRCHG: DR change from neighbor 10.0.0.2 to 10.0.0.3 on interface Ethernet0/2

*Feb 13 12:53:02.013: %PIM-5-NBRCHG: neighbor 10.0.0.1 DOWN on interface Ethernet0/2 non DR

We lose a lot of packets while waiting for PIM to converge:

S1#ping 239.0.0.1 re 100 time 1

Type escape sequence to abort.

Sending 100, 100-byte ICMP Echos to 239.0.0.1, timeout is 1 seconds:

Reply to request 0 from 10.0.0.10, 5 ms

Reply to request 0 from 10.0.0.10, 14 ms...................................................................

Reply to request 68 from 10.0.0.10, 10 ms

Reply to request 69 from 10.0.0.10, 2 ms

Reply to request 70 from 10.0.0.10, 1 ms

HSRP aware PIM didn’t really help us here… So when is it useful? If we use the following topology instead:

The router R5 has been added and the receiver now sits behind R5 instead. R5 does not run a routing protocol with R2 and R3. It only has static routes pointing at the VIP for the RP and the multicast source:

R5(config)#ip route 1.1.1.1 255.255.255.255 10.0.0.1

R5(config)#ip route 41.41.41.0 255.255.255.0 10.0.0.1

Without HSRP aware PIM, the RPF check on R5 would fail because R2 and R3 would only peer using their physical addresses, while the static routes point at the VIP. With the feature enabled, R5 sees three neighbors on the segment, one of which is the VIP:

R5#sh ip pim nei

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

P - Proxy Capable, S - State Refresh Capable, G - GenID Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

10.0.0.2 Ethernet0/0 00:03:00/00:01:41 v2 100/ DR S P G

10.0.0.1 Ethernet0/0 00:03:00/00:01:41 v2 0 / S P G

10.0.0.3 Ethernet0/0 00:03:00/00:01:41 v2 1 / S P G
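The reason the VIP has to show up as a PIM neighbor can be sketched as a simple membership check. This is a deliberately simplified model with a hypothetical function name: it assumes R5 will only send a Join towards an RPF next hop that it also knows as a PIM neighbor.

```python
def can_send_join(rpf_next_hop, pim_neighbors):
    """True if the RPF next hop is a known PIM neighbor on the segment."""
    return rpf_next_hop in pim_neighbors


static_next_hop = "10.0.0.1"   # the VIP that R5's static routes point at

# Without HSRP aware PIM only the physical addresses are neighbors.
print(can_send_join(static_next_hop, {"10.0.0.2", "10.0.0.3"}))
# With the feature the VIP is a neighbor too, as in the output above.
print(can_send_join(static_next_hop, {"10.0.0.1", "10.0.0.2", "10.0.0.3"}))
```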

R2 will be the one forwarding multicast during normal conditions due to it being the PIM DR via HSRP state of active router:

R2#sh ip mroute 239.0.0.1

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.0.0.1), 00:02:12/00:02:39, RP 1.1.1.1, flags: S

Incoming interface: Ethernet0/0, RPF nbr 12.12.12.1

Outgoing interface list:

Ethernet0/2, Forward/Sparse, 00:02:12/00:02:39

Let’s try a ping from the source:

S1#ping 239.0.0.1 re 3

Type escape sequence to abort.

Sending 3, 100-byte ICMP Echos to 239.0.0.1, timeout is 2 seconds:

Reply to request 0 from 20.0.0.10, 1 ms

Reply to request 1 from 20.0.0.10, 2 ms

Reply to request 2 from 20.0.0.10, 2 ms

The ping works and R2 has the (S,G):

R2#sh ip mroute 239.0.0.1

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.0.0.1), 00:04:18/00:03:29, RP 1.1.1.1, flags: S

Incoming interface: Ethernet0/0, RPF nbr 12.12.12.1

Outgoing interface list:

Ethernet0/2, Forward/Sparse, 00:04:18/00:03:29

(41.41.41.10, 239.0.0.1), 00:01:35/00:01:24, flags: T

Incoming interface: Ethernet0/0, RPF nbr 12.12.12.1

Outgoing interface list:

Ethernet0/2, Forward/Sparse, 00:01:35/00:03:29

What happens when R2 fails?

R2#conf t

Enter configuration commands, one per line. End with CNTL/Z.

R2(config)#int e0/2

R2(config-if)#sh

R2(config-if)#

S1#ping 239.0.0.1 re 200 ti 1

Type escape sequence to abort.

Sending 200, 100-byte ICMP Echos to 239.0.0.1, timeout is 1 seconds:

Reply to request 0 from 20.0.0.10, 9 ms

Reply to request 1 from 20.0.0.10, 2 ms

Reply to request 1 from 20.0.0.10, 11 ms....................................................................

......................................................................

............................................................

The pings time out because when the PIM Join from R5 comes in, R3 does not realize that it should process the Join.

*Feb 13 13:20:13.236: PIM(0): Received v2 Join/Prune on Ethernet0/2 from 10.0.0.5, not to us

*Feb 13 13:20:32.183: PIM(0): Generation ID changed from neighbor 10.0.0.2

As it turns out, the PIM redundancy command must be configured on the secondary router as well for it to process PIM Joins to the VIP.

R3(config-if)#ip pim redundancy HSRP1 hsrp dr-priority 10

After this has been configured, the incoming Join will be processed. R3 triggers R5 to send a new Join because the GenID in the PIM hello is set to a new value.

*Feb 13 13:59:19.333: PIM(0): Matched redundancy group VIP 10.0.0.1 on Ethernet0/2 Active, processing the Join/Prune, to us

*Feb 13 13:40:34.043: PIM(0): Generation ID changed from neighbor 10.0.0.1

After configuring this, the PIM DR role converges as fast as HSRP allows. I’m using BFD in this scenario.

The key concept for understanding HSRP aware PIM here is that:

- Initially configuring PIM redundancy on the AR will make it the DR

- PIM redundancy must be configured on the secondary router as well, otherwise it will not process PIM Joins to the VIP

- The PIM DR role does not converge until PIM hellos have timed out. The secondary router will process the Joins though, so the multicast will converge
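The behaviour seen in the debugs ("not to us" versus "Matched redundancy group VIP") can be summarised as a small predicate. This is a sketch of the observed behaviour rather than Cisco's actual logic, and the function and parameter names are invented for the example.

```python
def processes_join(upstream_neighbor, own_address, vip,
                   redundancy_configured, hsrp_active):
    """Decide whether a router acts on a Join addressed to upstream_neighbor."""
    if upstream_neighbor == own_address:
        return True   # a Join sent to the physical address is always ours
    # A Join sent to the VIP is only ours if PIM redundancy is configured
    # and this router is currently the HSRP active router.
    return upstream_neighbor == vip and redundancy_configured and hsrp_active


# R3 is HSRP active after R2 failed, Join from R5 is addressed to the VIP.
print(processes_join("10.0.0.1", "10.0.0.3", "10.0.0.1", False, True))  # not to us
print(processes_join("10.0.0.1", "10.0.0.3", "10.0.0.1", True, True))   # processed
```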

This feature is not very well documented, so I hope this post has taught you a bit about how it really works. It does not help when you have receivers directly on an HSRP LAN, because the DR role is NOT moved until the PIM adjacency expires.

The Tale of the Mysterious PIM Prune

Christmas is lurking around the corner and in the spirit of Denise “Fish” Fishburne, I give you “The Tale of the Mysterious PIM Prune”.

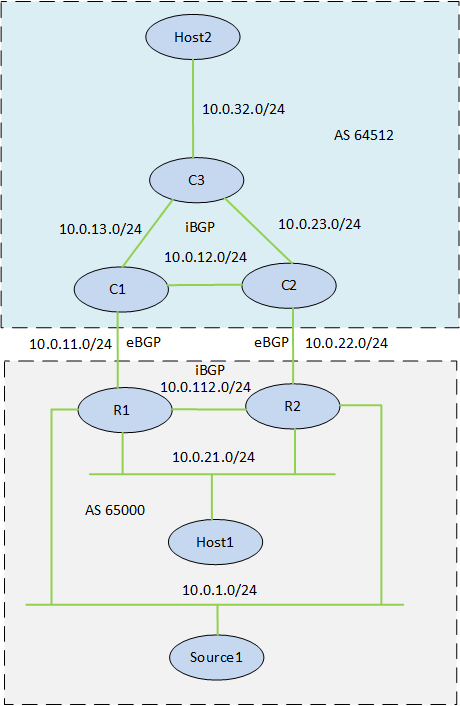

I have been working a lot with multicast lately which is also why I’ve blogged about it. To start off this story, let’s begin with a network topology.

The multicast source is located in AS 65000, which contains two routers that are connected to the multicast source. The routers run BFD, OSPF, iBGP and PIM internally, and the RP is located on C1. There is a local receiver in AS 65000 and a remote one in AS 64512. The networks 10.0.1.0/24 and 10.0.21.0/24 come off the same physical interface. If you want to replicate this lab, all the configs are provided here.

This network requires fast convergence and I have been troubleshooting a scenario where the active multicast router (R1) has its LAN interface go down, meaning that the traffic from the source must come in on R2. In this scenario I have seen convergence take up to 60 seconds, which is not acceptable. The BGP design is for R2 to still exit out via R1 if the link towards C1 is available. The picture below shows the normal multicast flow.

When R1 has its LAN interface go down, the traffic will pass from R2 over the link to R1 and out to C1.

R1 and R2 have a default route learned via BGP that points at C1. This will be an important piece of the puzzle later. Let’s go through what happens, step by step when R1 has its LAN interface go down. To simulate the multicast traffic I have an Ubuntu machine acting as the source. The receivers are CSR1000v routers with debug ip icmp to see how often the traffic is coming in. I’m sending ICMP packets every 100 ms.

*Dec 14 19:48:08.922: HSRP: Gi3.1 Interface DOWN

The interface on R1 goes down.

*Dec 14 19:48:08.926: is_up: GigabitEthernet3.1 0 state: 6 sub state: 1 line: 1

*Dec 14 19:48:08.926: RT: interface GigabitEthernet3.1 removed from routing table

*Dec 14 19:48:08.926: RT: del 10.0.1.0 via 0.0.0.0, connected metric [0/0]

*Dec 14 19:48:08.926: RT: delete subnet route to 10.0.1.0/24

*Dec 14 19:48:08.926: is_up: GigabitEthernet3.1 0 state: 6 sub state: 1 line: 1

*Dec 14 19:48:08.927: RT(multicast): interface GigabitEthernet3.1 removed from routing table

*Dec 14 19:48:08.927: RT(multicast): del 10.0.1.0 via 0.0.0.0, connected metric [0/0]

*Dec 14 19:48:08.927: RT(multicast): delete subnet route to 10.0.1.0/24

*Dec 14 19:48:08.927: RT: del 10.0.1.2 via 0.0.0.0, connected metric [0/0]

*Dec 14 19:48:08.927: RT: delete subnet route to 10.0.1.2/32

*Dec 14 19:48:08.927: RT(multicast): del 10.0.1.2 via 0.0.0.0, connected metric [0/0]

*Dec 14 19:48:08.927: RT(multicast): delete subnet route to 10.0.1.2/32

It takes roughly 5 ms to remove the route from the RIB and the MRIB.

*Dec 14 19:48:08.935: RT: add 10.0.1.0/24 via 10.0.255.2, bgp metric [200/0]

*Dec 14 19:48:08.936: RT: updating bgp 10.0.21.0/24 (0x0) :

via 10.0.255.2 0 1048577

R1 starts to install the route to the source via R2 into the RIB, but the route is not yet installed! This is a key concept.

*Dec 14 19:48:08.937: MRT(0): Delete GigabitEthernet1/239.0.0.1 from the olist of (10.0.1.10, 239.0.0.1)

*Dec 14 19:48:08.937: MRT(0): Reset the PIM interest flag for (10.0.1.10, 239.0.0.1)

*Dec 14 19:48:08.938: MRT(0): set min mtu for (10.0.1.10, 239.0.0.1) 1500->1500

*Dec 14 19:48:08.938: MRT(0): (10.0.1.10,239.0.0.1), RPF change from GigabitEthernet3.1/0.0.0.0 to GigabitEthernet1/10.0.11.1

*Dec 14 19:48:08.938: MRT(0): Reset the F-flag for (10.0.1.10, 239.0.0.1)

*Dec 14 19:48:08.938: PIM(0): Insert (10.0.1.10,239.0.0.1) join in nbr 10.0.11.1's queue

*Dec 14 19:48:08.938: PIM(0): Building Join/Prune packet for nbr 10.0.11.1

*Dec 14 19:48:08.938: PIM(0): Adding v2 (10.0.1.10/32, 239.0.0.1), S-bit Join

*Dec 14 19:48:08.938: PIM(0): Send v2 join/prune to 10.0.11.1 (GigabitEthernet1)

*Dec 14 19:48:08.938: RT: updating bgp 10.0.1.0/24 (0x0) :

via 10.0.255.2 0 1048577

R1 cleans up some multicast state and then it sends a PIM Join towards C1, but the source is not located in that direction! The giveaway here is the message about RPF change and 0.0.0.0 which is the default route pointing towards C1. This default route is already installed into the RIB so the PIM Join is sent out the RPF interface which for a brief period happens to be towards C1. The receiver is still located in that direction though.

*Dec 14 19:48:08.938: RT: closer admin distance for 10.0.1.0, flushing 1 routes

*Dec 14 19:48:08.938: RT: add 10.0.1.0/24 via 10.0.255.2, bgp metric [200/0]

*Dec 14 19:48:08.939: MRT(0): (10.0.1.10,239.0.0.1), RPF change from GigabitEthernet1/10.0.11.1 to GigabitEthernet2/10.0.112.2

*Dec 14 19:48:08.939: PIM(0): Insert (10.0.1.10,239.0.0.1) join in nbr 10.0.112.2's queue

*Dec 14 19:48:08.939: PIM(0): Insert (10.0.1.10,239.0.0.1) prune in nbr 10.0.11.1's queue

*Dec 14 19:48:08.939: PIM(0): Building Join/Prune packet for nbr 10.0.11.1

*Dec 14 19:48:08.939: PIM(0): Adding v2 (10.0.1.10/32, 239.0.0.1), S-bit Prune

*Dec 14 19:48:08.939: PIM(0): Send v2 join/prune to 10.0.11.1 (GigabitEthernet1)

*Dec 14 19:48:08.939: PIM(0): Building Join/Prune packet for nbr 10.0.112.2

*Dec 14 19:48:08.939: PIM(0): Adding v2 (10.0.1.10/32, 239.0.0.1), S-bit Join

*Dec 14 19:48:08.939: PIM(0): Send v2 join/prune to 10.0.112.2 (GigabitEthernet2)

R1 then installs the route via R2 and tries to cover its tracks by pruning off the interface towards C1. Hold on a second here though, isn’t that where the receiver is located? Indeed it is. This means the (S,G) tree is broken until C1 sends a periodic Join, which could take from 0-60 seconds depending on when the last Join came in. The arrows on the topology show the events in order: first a Join towards C1, then a Prune towards C1, then a Join towards R2.
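The two RPF changes in the debugs are just longest-prefix match at two different moments in time. The sketch below replays that with a toy RIB. The interfaces and next hops are taken from the log output, while the function name and the flattened RIB format are assumptions, and the BGP next hop 10.0.255.2 is shown already resolved to GigabitEthernet2/10.0.112.2.

```python
import ipaddress


def rpf_lookup(rib, source):
    """Longest-prefix match for the source; returns (interface, next_hop)."""
    src = ipaddress.ip_address(source)
    matches = [prefix for prefix in rib if src in ipaddress.ip_network(prefix)]
    best = max(matches, key=lambda prefix: ipaddress.ip_network(prefix).prefixlen)
    return rib[best]


# Right after the connected route is removed only the default route matches.
rib = {"0.0.0.0/0": ("GigabitEthernet1", "10.0.11.1")}
print(rpf_lookup(rib, "10.0.1.10"))   # Join is sent towards C1

# A millisecond later the BGP route via R2 is installed.
rib["10.0.1.0/24"] = ("GigabitEthernet2", "10.0.112.2")
print(rpf_lookup(rib, "10.0.1.10"))   # RPF moves to R2, Prune goes to C1
```

The brief window in which only the default route matches is what produces the Join followed by the Prune towards C1.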

*Dec 14 19:48:09.932: PIM(0): Insert (10.0.1.10,239.0.0.1) prune in nbr 10.0.112.2's queue - deleted

*Dec 14 19:48:09.932: PIM(0): Building Join/Prune packet for nbr 10.0.112.2

*Dec 14 19:48:09.932: PIM(0): Adding v2 (10.0.1.10/32, 239.0.0.1), S-bit Prune

*Dec 14 19:48:09.932: PIM(0): Send v2 join/prune to 10.0.112.2 (GigabitEthernet2)

R1 no longer has any receivers interested in the traffic because it pruned off the only remaining receiver, located towards C1, so it sends a Prune towards R2.

*Dec 14 19:48:50.864: PIM(0): Received v2 Join/Prune on GigabitEthernet1 from 10.0.11.1, to us

*Dec 14 19:48:50.864: PIM(0): Join-list: (10.0.1.10/32, 239.0.0.1), S-bit set

*Dec 14 19:48:50.864: MRT(0): WAVL Insert interface: GigabitEthernet1 in (10.0.1.10,239.0.0.1) Successful

*Dec 14 19:48:50.864: MRT(0): set min mtu for (10.0.1.10, 239.0.0.1) 18010->1500

*Dec 14 19:48:50.864: MRT(0): Add GigabitEthernet1/239.0.0.1 to the olist of (10.0.1.10, 239.0.0.1), Forward state - MAC built

*Dec 14 19:48:50.864: PIM(0): Add GigabitEthernet1/10.0.11.1 to (10.0.1.10, 239.0.0.1), Forward state, by PIM SG Join

*Dec 14 19:48:50.864: MRT(0): Add GigabitEthernet1/239.0.0.1 to the olist of (10.0.1.10, 239.0.0.1), Forward state - MAC built

*Dec 14 19:48:50.864: MRT(0): Set the PIM interest flag for (10.0.1.10, 239.0.0.1)

*Dec 14 19:48:50.864: PIM(0): Insert (10.0.1.10,239.0.0.1) join in nbr 10.0.112.2's queue

*Dec 14 19:48:50.864: PIM(0): Building Join/Prune packet for nbr 10.0.112.2

*Dec 14 19:48:50.864: PIM(0): Adding v2 (10.0.1.10/32, 239.0.0.1), S-bit Join

*Dec 14 19:48:50.864: PIM(0): Send v2 join/prune to 10.0.112.2 (GigabitEthernet2)

C1 is still interested in this multicast traffic, so it sends a periodic Join towards R1, which then triggers R1 to send a Join towards R2. There was roughly a 40 second delay between these events. This can also be seen from the ping that I had running.

*Dec 14 19:48:08.842: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 19:48:08.942: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 19:48:09.042: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 19:48:51.054: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 19:48:51.158: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 19:48:51.255: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 19:48:51.355: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0
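To put a number on the outage, a quick sketch can find the largest gap between consecutive echo replies. The timestamps are copied from the ICMP debug output above; the helper name `largest_gap` is made up for this illustration.

```python
from datetime import datetime

def largest_gap(stamps):
    """Return the largest gap in seconds between consecutive timestamps."""
    times = [datetime.strptime(s, "%H:%M:%S.%f") for s in stamps]
    return max((b - a).total_seconds() for a, b in zip(times, times[1:]))

# Echo reply timestamps taken from the ICMP debug output.
replies = [
    "19:48:08.842", "19:48:08.942", "19:48:09.042",
    "19:48:51.054", "19:48:51.158", "19:48:51.255", "19:48:51.355",
]

outage = largest_gap(replies)
print(f"Longest gap between replies: {outage:.3f} s")
```

The gap between the 19:48:09.042 and 19:48:51.054 replies is about 42 seconds, which lines up with the delay seen in the PIM debugs.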

This story shows how the unicast routing table and a race condition can affect your multicast traffic. What would a story be without a happy ending though? What can we do to solve the race condition?

The key here is that the default route is already installed into the RIB. To beat it we have to put a longer match into the RIB or into the MRIB. This can be done by adding a static unicast route or a static multicast route. I prefer to use a static multicast route since that will have no effect on unicast traffic.

ip mroute 10.0.1.0 255.255.255.0 10.0.112.2

or

ip route 10.0.1.0 255.255.255.0 10.0.112.2
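Why the more specific route wins can be sketched with a toy longest-prefix-match lookup. This is a simplified model, not how IOS implements RPF: real RPF selection also weighs administrative distance across several tables. The function name `rpf_next_hop` and the single-table layout are assumptions made for illustration; the addresses come from the debugs in this post.

```python
import ipaddress

def rpf_next_hop(table, source):
    """Pick the next hop of the longest prefix in `table` that covers `source`."""
    src = ipaddress.ip_address(source)
    best = None
    for prefix, next_hop in table.items():
        net = ipaddress.ip_network(prefix)
        if src in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, next_hop)
    return best[1] if best else None

# Right after the connected route is withdrawn, only the default route is left.
before_fix = {"0.0.0.0/0": "10.0.11.1"}           # default route towards C1
# The same moment with the static route from this post in place.
after_fix = {"0.0.0.0/0": "10.0.11.1",
             "10.0.1.0/24": "10.0.112.2"}          # static route towards R2

print(rpf_next_hop(before_fix, "10.0.1.10"))  # falls back to the default route
print(rpf_next_hop(after_fix, "10.0.1.10"))   # the /24 beats the default route
```

In the first table the lookup for the source 10.0.1.10 lands on the default route towards C1, which is the race condition described above. With the /24 present, the lookup resolves towards R2 even before BGP has installed its route.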

The connected route is the best route until R1 has its LAN interface go down. The MRIB will then use the next match which is 10.0.1.0 via 10.0.112.2. Let’s run another test now that we have altered the MRIB.

*Dec 14 20:29:03.128: HSRP: Gi3.1 Interface going DOWN

Interface goes down.

*Dec 14 20:29:03.142: is_up: GigabitEthernet3.1 0 state: 6 sub state: 1 line: 1

*Dec 14 20:29:03.142: RT: interface GigabitEthernet3.1 removed from routing table

*Dec 14 20:29:03.142: RT: del 10.0.1.0 via 0.0.0.0, connected metric [0/0]

*Dec 14 20:29:03.142: RT: delete subnet route to 10.0.1.0/24

*Dec 14 20:29:03.143: is_up: GigabitEthernet3.1 0 state: 6 sub state: 1 line: 1

*Dec 14 20:29:03.143: RT(multicast): interface GigabitEthernet3.1 removed from routing table

*Dec 14 20:29:03.143: RT(multicast): del 10.0.1.0 via 0.0.0.0, connected metric [0/0]

*Dec 14 20:29:03.143: RT(multicast): delete subnet route to 10.0.1.0/24

*Dec 14 20:29:03.143: RT: del 10.0.1.2 via 0.0.0.0, connected metric [0/0]

*Dec 14 20:29:03.143: RT: delete subnet route to 10.0.1.2/32

*Dec 14 20:29:03.143: RT(multicast): del 10.0.1.2 via 0.0.0.0, connected metric [0/0]

*Dec 14 20:29:03.143: RT(multicast): delete subnet route to 10.0.1.2/32

Remove 10.0.1.0/24 from the RIB and MRIB.

*Dec 14 20:29:03.150: RT: add 10.0.1.0/24 via 10.0.255.2, bgp metric [200/0]

*Dec 14 20:29:03.150: RT: updating bgp 10.0.21.0/24 (0x0) :

via 10.0.255.2 0 1048577

*Dec 14 20:29:03.150: RT: add 10.0.21.0/24 via 10.0.255.2, bgp metric [200/0]

Start installing the BGP route.

*Dec 14 20:29:03.152: MRT(0): (10.0.1.10,239.0.0.1), RPF change from GigabitEthernet3.1/0.0.0.0 to GigabitEthernet2/10.0.112.2

*Dec 14 20:29:03.152: MRT(0): Reset the F-flag for (10.0.1.10, 239.0.0.1)

*Dec 14 20:29:03.152: PIM(0): Insert (10.0.1.10,239.0.0.1) join in nbr 10.0.112.2's queue

*Dec 14 20:29:03.152: PIM(0): Building Join/Prune packet for nbr 10.0.112.2

*Dec 14 20:29:03.152: PIM(0): Adding v2 (10.0.1.10/32, 239.0.0.1), S-bit Join

*Dec 14 20:29:03.152: PIM(0): Send v2 join/prune to 10.0.112.2 (GigabitEthernet2)

*Dec 14 20:29:03.153: RT: updating bgp 10.0.1.0/24 (0x0) :

via 10.0.255.2 0 1048577

*Dec 14 20:29:03.153: RT: closer admin distance for 10.0.1.0, flushing 1 routes

*Dec 14 20:29:03.153: RT: add 10.0.1.0/24 via 10.0.255.2, bgp metric [200/0]

The RPF interface changes but now points towards the correct destination, which is R2. The multicast traffic can flow again. How many packets did we lose?

*Dec 14 20:29:02.017: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:02.115: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:02.216: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:02.317: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:02.418: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:02.519: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:02.620: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:02.720: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:02.820: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:02.920: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:03.021: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:03.122: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:03.223: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:03.324: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:03.424: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:03.526: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:03.626: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:03.727: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:03.828: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

*Dec 14 20:29:03.927: ICMP: echo reply sent, src 10.0.32.10, dst 10.0.1.10, topology BASE, dscp 0 topoid 0

No packets lost! I would probably have to send packets more often than every 100 ms to catch the tree converging here. In a real network you would see some delay because pulling the cable is different from shutting down an interface; the carrier delay would come into play here. Here are some key concepts you should learn from this post.

- Unicast routing table will impact the multicast routing table

- Never assume anything, verify

- Installing routes takes time

There are a lot of moving parts here. If you don’t understand it all at once, don’t worry. It’s a complex scenario and don’t be afraid to ask questions in the comments section. Here are the key concepts of converging in this scenario.

- Interface goes down (0-2 seconds)

- Remove stale route (14 ms)

- Install new route (3 ms)

- RPF change/PIM Join (2 ms)

These are values from my tests and you will see higher values on production equipment. Because PIM Joins are triggered by the routing table changing, convergence can be very fast depending on how fast you detect it. As you can see from the values above, you can achieve convergence somewhere between 0.5 – 3 seconds realistically depending on how aggressively you tune your timers.
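As a rough sanity check, the steps can be added up in a few lines. The per-step values are the lab measurements listed above; the function name and the 0.5 second detection time are only assumptions for illustration.

```python
# Per-step values from the list above, in seconds (lab measurements from this post).
REMOVE_STALE_ROUTE = 0.014
INSTALL_NEW_ROUTE = 0.003
RPF_CHANGE_AND_JOIN = 0.002

def convergence_time(detection_seconds):
    """Total convergence time for a given failure detection time."""
    return (detection_seconds + REMOVE_STALE_ROUTE
            + INSTALL_NEW_ROUTE + RPF_CHANGE_AND_JOIN)

# 0.0 and 2.0 are the bounds from the list; 0.5 is a hypothetical tuned value.
for detection in (0.0, 0.5, 2.0):
    print(f"detection {detection:.1f} s -> total {convergence_time(detection):.3f} s")
```

The routing and PIM steps add up to 19 ms in this lab, so failure detection is by far the dominant term.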

After reading this post you should have a better understanding of multicast, the RIB, MRIB and the unicast routing table impact on multicast flows.

Using EEM to Speed up Multicast Convergence when Receiver is Dually Connected

When deploying PIM ASM, the Designated Router (DR) role plays a significant part in how PIM ASM works. The DR on a segment is responsible for registering multicast sources with the Rendezvous Point (RP) and/or sending PIM Joins for the segment. Routers with PIM enabled interfaces send out PIM Hello messages every 30 seconds by default.

After missing three Hellos the secondary router will take over as the DR. With the standard timer value, this can take between 60 and 90 seconds depending on when the last Hello came in. Not really acceptable in a modern network.

The first thought is to lower the PIM query interval. This can be done, and it supports sending PIM Hellos at msec level. In my particular case I needed convergence within two seconds. I tuned the PIM query interval to 500 msec, meaning that the PIM DR role should converge within 1.5 seconds. The problem though is that these Hellos are sent at process level. Even though my routers were barely breaking a sweat CPU wise, I would see PIM adjacencies flapping.
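The timer arithmetic can be sketched as follows. This follows the three-missed-Hellos rule used in this post; the hold time a given router actually advertises may differ, and the function name is made up for illustration.

```python
def dr_failover_window(hello_interval, missed_hellos=3):
    """Return (best_case, worst_case) seconds until the neighbor is declared dead."""
    best = (missed_hellos - 1) * hello_interval   # failure just before the next Hello
    worst = missed_hellos * hello_interval        # failure just after a Hello
    return best, worst

print(dr_failover_window(30))    # default 30 second Hello interval
print(dr_failover_window(0.5))   # 500 msec query interval
```

With the default interval this gives the 60 to 90 second window mentioned above, and with 500 msec Hellos the worst case drops to 1.5 seconds.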

The answer to my problems would be to have Bidirectional Forwarding Detection (BFD) for PIM but it’s only supported on a very limited set of platforms. I already have BFD running for OSPF and BGP but unfortunately it’s not supported for PIM. The advantage of BFD is that the Hellos are more lightweight and they are sent through interrupt instead of process level. This provides more deterministic behavior than the regular PIM Hellos.

So how did I solve my problem? I need something that detects failure, I need BFD. Hot Standby Routing Protocol (HSRP) detects failures, HSRP has support for BFD. I could then use HSRP to detect the failure and act on the Syslog message generated by HSRP. Even though I didn’t really need HSRP on that segment it helped me in moving the PIM DR role which I wrote this Embedded Event Manager (EEM) applet for. A thank you to Peter Paluch for providing this idea and support 🙂

The configuration of the interface is this:

interface GigabitEthernet0/2.100

description *** Receiver LAN ***

encapsulation dot1Q 100

ip address 10.0.100.3 255.255.255.0

no ip redirects

no ip unreachables

no ip proxy-arp

ip pim sparse-mode

standby version 2

standby 1 ip 10.0.100.1

standby 1 preempt delay reload 180

standby 1 name HSRP-1

bfd interval 300 min_rx 300 multiplier 3

BFD is sending Hellos every 300 msec so it will converge within 900 msec. The key is then to find the Syslog message that HSRP generates when it detects a failure. These messages look like this:

%HSRP-5-STATECHANGE: GigabitEthernet0/2.100 Grp 1 state Standby -> Active

%HSRP-5-STATECHANGE: GigabitEthernet0/2.100 Grp 1 state Speak -> Standby

It is then possible to write an EEM applet acting on this message and setting the DR priority on the secondary router.

event manager applet CHANGE-DR-UP-RECEIVER

event syslog pattern "%HSRP-5-STATECHANGE: GigabitEthernet0/2.100 Grp 1 state Standby -> Active"

action 1.0 syslog msg "Changing DR on interface Gi0/2.100 due to AR is DOWN"

action 1.1 cli command "enable"

action 1.2 cli command "conf t"

action 1.3 cli command "interface gi0/2.100"

action 1.4 cli command "ip pim dr-priority 100"

action 1.5 cli command "end"

When HSRP has detected the failure, the EEM applet will trigger very quickly and set the priority.

116072: Nov 20 13:03:04.544 UTC: %HSRP-5-STATECHANGE: GigabitEthernet0/2.100 Grp 1 state Standby -> Active

116080: Nov 20 13:03:04.552 UTC: %HA_EM-6-LOG: CHANGE-DR-UP-RECEIVER : DEBUG(cli_lib) : : CTL : cli_open called.

116120: Nov 20 13:03:04.604 UTC: PIM(0): Changing DR for GigabitEthernet0/2.100, from 10.0.100.2 to 10.0.100.3 (this system)

116121: Nov 20 13:03:04.604 UTC: %PIM-5-DRCHG: DR change from neighbor 10.0.100.2 to 10.0.100.3 on interface GigabitEthernet0/2.100

It took 60 msec from HSRP detecting the failure through BFD until the DR role had converged. It’s then possible to recover from a failure within a second.

It’s also important to set the DR priority back after the network converges. We use another applet for this:

event manager applet CHANGE-DR-DOWN-RECEIVER

event syslog pattern "%HSRP-5-STATECHANGE: GigabitEthernet0/2.100 Grp 1 state Speak -> Standby"

action 1.0 syslog msg "Changing DR on interface Gi0/2.100 due to AR is UP"

action 1.1 cli command "enable"

action 1.2 cli command "conf t"

action 1.3 cli command "interface gi0/2.100"

action 1.4 cli command "no ip pim dr-priority 100"

action 1.5 cli command "end"
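For readers who think better in code, the decision logic of the two applets can be sketched in Python. This is not how EEM works internally; it only mirrors the pattern matching and the resulting interface commands. The function name `dr_commands` and its parameters are made up for this illustration.

```python
import re

# Matches the HSRP state change syslog message shown earlier.
HSRP_RE = re.compile(
    r"%HSRP-5-STATECHANGE: (?P<intf>\S+) Grp (?P<grp>\d+) "
    r"state (?P<old>\w+) -> (?P<new>\w+)"
)

def dr_commands(line, interface="GigabitEthernet0/2.100", group=1, priority=100):
    """Return the interface commands the applets would apply for a syslog line."""
    m = HSRP_RE.search(line)
    if not m or m["intf"] != interface or int(m["grp"]) != group:
        return []
    transition = (m["old"], m["new"])
    if transition == ("Standby", "Active"):
        # Peer is gone: take over the DR role.
        return [f"interface {interface}", f"ip pim dr-priority {priority}"]
    if transition == ("Speak", "Standby"):
        # Peer is back: restore the default priority.
        return [f"interface {interface}", f"no ip pim dr-priority {priority}"]
    return []

up = "%HSRP-5-STATECHANGE: GigabitEthernet0/2.100 Grp 1 state Standby -> Active"
down = "%HSRP-5-STATECHANGE: GigabitEthernet0/2.100 Grp 1 state Speak -> Standby"
print(dr_commands(up))
print(dr_commands(down))
```

Any other state transition, or a message for another interface or group, results in no action, which matches applets that trigger only on an exact syslog pattern.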

This works very well. There are some considerations when running EEM. Firstly, if you are running AAA then the EEM applet will fail authorization. This can be bypassed with the following command:

event manager applet CHANGE-DR-UP-RECEIVER authorization bypass

It’s also important to note that the EEM applet will use a VTY line when executing so make sure that there are available VTY’s when the applet runs.

After the PIM DR role has converged, the router will send out a PIM Join and the multicast will start flowing to the receiver.

Lessons Learned from Deploying Multicast

Lately I have been working a lot with multicast, which is fun and challenging! Even if you have a good understanding of multicast unless you work on it a lot there may be some concepts that fall out of memory or that you only run into in real life and not in the lab. Here is a summary of some things I’ve noticed so far.

PIM Register

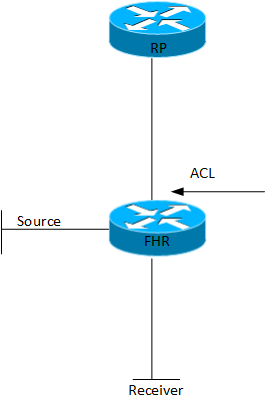

PIM Register messages are control plane messages sent from the First Hop Router (FHR) towards the Rendezvous Point (RP). These are unicast messages encapsulating the multicast packets from the multicast source. There are some considerations here. Firstly, because these packets are sent from the FHR control plane to the RP control plane, they are not subject to any access list configured outbound on the FHR. I had a situation where I wanted to route the multicast locally but not send it outbound.

Even if the ACL was successful, care would have to be taken to not break the control plane between the FHR and the RP or all multicast traffic for the group would be in jeopardy.

Because the PIM Register messages are control plane messages, the RP has to process them in the control plane, which will tax the CPU. Depending on the rate that the FHR is sending to the RP and the number of sources, this can be very stressful on the CPU. As a safeguard the following command can be implemented:

ip pim register-rate-limit 20000

This command is applied on FHRs and limits the rate of the PIM Register messages to 20 kbit/s. By default there is no limit, so set it to something that makes sense in your environment.

Storm Control

If you have switches in your multicast environment, and most likely you will, implement storm control. If a loop forms you don’t want to have an unlimited amount of broadcast and multicast flooding your layer 2 domain. Combined with the PIM Register this can be a real killer for the control plane if your FHR is trying to register sources at a very high packet rate.

storm-control broadcast level pps 100

storm-control multicast level pps 1k

The above is just an example. You have to set it to something that fits your environment. Make sure to leave some room for more traffic than expected, but not enough to hurt your devices if something goes wrong.

S,G Timeout

PIM Any Source Multicast (ASM) relies on using an RP when setting up the flow between the multicast sender and receiver. The receiver will first join the (*,G) tree which is rooted at the RP. After the receiver learns of the source it can switch over to the source tree (S,G). The (S,G) mroute in the Multicast Routing Information Base (MRIB) has a standard lifetime of 180 seconds. It can be beneficial to raise this timeout depending on the topology. Look at the following topology:

If something happens to the source making it go away for three minutes, the (S,G) state will time out. Let’s then say that the source comes back but the RP is not available. The FHR will then not be able to register the source and no traffic can flow between the source and the receiver. If a higher timeout was configured for the (S,G), the traffic would start flowing again when the source came back online. It’s not a very common scenario but it can be a reasonable safeguard for important multicast groups. The drawback of configuring it is that you will keep state for a longer time even if it is not needed.

ip pim sparse sg-expiry-timer <value>

The maximum timeout is 57600 seconds, which is 16 hours. Setting it to a couple of hours may cut you some slack if something happens to the RP. Be careful if you have a lot of groups running though.

These are some important aspects but certainly not all. What lessons have you learned from deploying multicast?

Potential Issues with Multicast within a VLAN Spanning Switches

Background

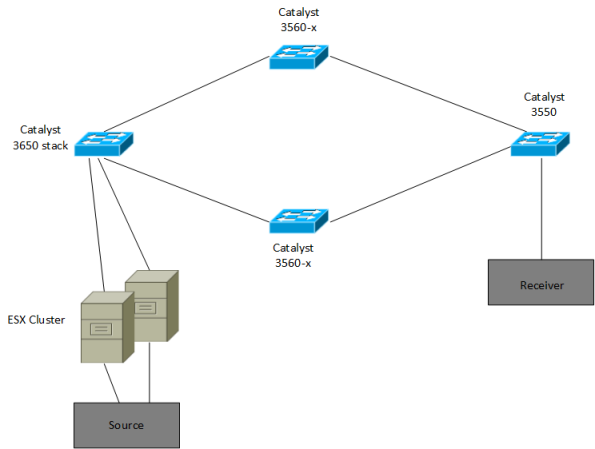

I ran into an interesting issue yesterday at work. There is a new video system being installed, which takes the video output from computers, encodes it and sends it as multicast to a controller. The controller then displays it on a video wall. I had been told that the network has to support multicast. As all the devices were residing in the same VLAN, I did not expect any issues.

However, the system was not able to receive the multicast. At first we expected it could be the virtual environment and that the vSwitch did not support multicast, because one server was deployed on the ESX cluster. The topology was this:

Multicast at Layer 2

Before describing the issue, let’s think about how multicast at layer 2 works. The source will send to a multicast destination IP. This IP is then converted to a destination MAC address. If the group is 227.0.0.1, this would map to the MAC address 0100.5e00.0001. Switches forward multicast and broadcast frames to all ports in a VLAN. This is not efficient in the case of multicast, as the traffic may not have been requested by the host connected to a port receiving the traffic.
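The IP to MAC conversion mentioned above can be sketched in a few lines: the low 23 bits of the group address are copied into the fixed 01-00-5e prefix. The function name `multicast_mac` is made up for this illustration.

```python
import ipaddress

def multicast_mac(group):
    """Map an IPv4 multicast group to its MAC address in Cisco dotted notation."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        raise ValueError(f"{group} is not an IPv4 multicast address")
    low23 = int(addr) & 0x7FFFFF        # keep only the low 23 bits of the group
    mac = 0x01005E000000 | low23        # fixed 01-00-5e prefix, next bit stays 0
    digits = f"{mac:012x}"
    return ".".join(digits[i:i + 4] for i in range(0, 12, 4))

print(multicast_mac("227.0.0.1"))     # the group used in this post
print(multicast_mac("227.128.0.1"))   # a different group, same MAC address
```

Because only 23 of the 28 variable bits of the group address fit into the MAC address, 32 different groups share every multicast MAC address. In the example, 227.0.0.1 and 227.128.0.1 both end up on 0100.5e00.0001.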

IGMP Snooping

IGMP is the protocol used by hosts to indicate that they are interested in receiving traffic for a multicast group. Cisco switches will by default run a feature called IGMP snooping. Instead of forwarding multicast traffic to all ports in the VLAN, the switch will only forward it to the ports that are interested in receiving it. This works by “snooping” on the IGMP reports from the hosts. If the switch sees an IGMP report on a port for a group, it knows that the host is interested in receiving the traffic. The switch will then only forward that traffic to ports where interested hosts reside. This is a nice feature but it can cause issues if running multicast in the same VLAN between different switches.

The Issue

Going back to the topology at the start, the source is now sending multicast traffic into the VLAN. The receiver has sent an IGMP report because it wants to receive the traffic.

The multicast traffic from the source reaches the 3650 stack but never leaves, keeping the traffic from passing through the other switches to the receiver.

Why? The switches are running IGMP snooping. When the Catalyst 3550 at the far right receives the IGMP report, it adds the receiver’s port to the ports receiving traffic for the multicast group. It does not, however, forward the IGMP report.

This means that the 3650 stack does not know that there are receivers that want to receive this multicast traffic. The 3650 stack has no entry for the multicast group in its snooping table. The traffic is essentially black holed.

The Fix

To understand the fix, you must first know about the mrouter port. The mrouter port is a port that leads to a multicast enabled router. For multicast traffic to pass through IGMP snooping enabled switches, there must be an mrouter port. This port can be statically assigned or dynamically learned. When the switch has an mrouter port it will forward some of the IGMP reports out the mrouter port, which means that the IGMP reports will reach the switch where the source is located. Not all reports must be relayed; it’s enough that the switch learns that there are receivers out there.
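The behavior can be modeled with a toy simulation. This is a deliberately simplified sketch with only two switches; the class, the port names and the `simulate` helper are all hypothetical and only capture the rule that reports are relayed out of mrouter ports.

```python
class SnoopingSwitch:
    """Very small model of an IGMP snooping switch (illustrative only)."""

    def __init__(self, name):
        self.name = name
        self.members = {}            # group -> set of ports with interested hosts
        self.mrouter_ports = set()   # ports leading to a multicast router or querier

    def receive_report(self, port, group):
        """Record the membership and return the ports the report is relayed out of."""
        self.members.setdefault(group, set()).add(port)
        return self.mrouter_ports - {port}

    def egress_ports(self, group, ingress):
        """Ports that multicast traffic for `group` is forwarded out of."""
        return (self.members.get(group, set()) | self.mrouter_ports) - {ingress}


def simulate(querier_enabled):
    group = "227.0.0.1"
    source_sw = SnoopingSwitch("source-switch")      # where the source is attached
    receiver_sw = SnoopingSwitch("receiver-switch")  # where the receiver is attached

    if querier_enabled:
        # The receiver switch hears general queries on its uplink and
        # learns that uplink as an mrouter port.
        receiver_sw.mrouter_ports.add("uplink")

    relayed = receiver_sw.receive_report("host-port", group)
    if "uplink" in relayed:
        # The report crosses the trunk and arrives on the source switch.
        source_sw.receive_report("trunk", group)

    return source_sw.egress_ports(group, ingress="source-port")

print(simulate(querier_enabled=False))  # empty set: traffic never leaves
print(simulate(querier_enabled=True))   # traffic is forwarded out the trunk
```

Without an mrouter port the report stays on the receiver's switch, so the switch with the source has nowhere to send the traffic. Once an mrouter port exists, the report is relayed and the trunk shows up as an egress port.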

In my case I configured the 3650 stack to be an IGMP querier.

SW-1#sh run | i querier

ip igmp snooping querier

When this feature is configured on a switch, the switch considers itself to be an mrouter, acting as a proxy instead of a router. It will send out general queries, and when the other switches see these queries they will dynamically learn the port they arrived on as an mrouter port.

SW-3#show ip igmp snooping mrouter

Vlan    ports

----    -----

10      Gi0/1(dynamic)

After turning on the IGMP querier feature, the issue was solved.

I hope this post can help someone in case you have issues with multicast in a switched environment. There is an excellent post about it here from Cisco.

More on SSM

As you’ve noticed I’ve been studying SSM, and what better way to learn than to blog about it. I recently got a Safari subscription which has been great so far. I’ve been reading the book Interdomain Multicast Routing: Practical Juniper Networks and Cisco Systems Solutions, which has also been very good.

We are still using the same topology and now we will look a bit more in detail what is happening.

R1 will be the source, sending traffic from its loopback. R3 will be the client running IGMPv3 on its upstream interface to R2. As explained in the previous post, I am doing this to simulate an end host. Otherwise I would configure it on R3’s downstream interface and then it would send a PIM Join upstream.

To run SSM we need IGMPv3 or use some form of mapping as described in previous post. It is important to note though that IGMPv3 is not specific for SSM. With SSM a (S,G) pair is described as a channel. Instead of join/leave it is now called subscribe and unsubscribe.

So the first thing that happens is that the client (computer or STB) sends IGMPv3 membership report to the destination IP 224.0.0.22. This is the IP used for IGMPv3. This is how the packet looks in Wireshark.

The destination IP is 224.0.0.22 which corresponds to the multicast MAC 01-00-5E-00-00-16. 16 in hex is 22 in decimal.

We clearly see that it is version 3 and the type is Membership Report 0x22. Number of group records show how many groups are being joined.

Then the actual group record is shown (225.0.0.1) and the type is Allow New Sources. The number of sources is 1. And then we see the channel (S,G) that is joined.

Then R2 sends a PIM Join towards the source.

We can see that it is a (S,G) join. The SPT is built.

R2 will send general IGMPv3 queries to see if there are still any receivers connected to the LAN segment.

The query is sent to all multicast hosts (224.0.0.1) and if still receiving the multicast the host will reply with a report.

The type is Membership Query (0x11). The Max Response Time is 10 seconds which is the time that the host has to reply within.

We can see in this report that the record type is Mode is include (1) compared to Allow New Sources when the first report was sent.

Now R3 unsubscribes to the channel and the IGMP report is used once again.

The type is now Block Old Sources (6).

After this has been sent the IGMP querier (router) has to make sure that there are no other subscribers to the channel so it sends out a channel specific query.

If no one responds to this, the router will send a PIM Prune upstream as can be seen here.

Finally. How can we see which router is the IGMP querier? Use the show ip igmp interface command.

R2#show ip igmp interface fa0/0

FastEthernet0/0 is up, line protocol is up

Internet address is 23.23.23.2/24

IGMP is enabled on interface

Current IGMP host version is 3

Current IGMP router version is 3

IGMP query interval is 60 seconds

IGMP querier timeout is 120 seconds

IGMP max query response time is 10 seconds

Last member query count is 2

Last member query response interval is 1000 ms

Inbound IGMP access group is not set

IGMP activity: 2 joins, 1 leaves

Multicast routing is enabled on interface

Multicast TTL threshold is 0

Multicast designated router (DR) is 23.23.23.2 (this system)

IGMP querying router is 23.23.23.2 (this system)

Multicast groups joined by this system (number of users):

224.0.1.40(1)

We can see some interesting things here. We can see which router is the designated router and IGMP querier. By default the IGMP querier is the router with the lowest IP and the DR is the one with the highest IP. The DR election can be influenced by changing the DR priority. We can also see which timers are used for query interval and max response time, among other timers.
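The two election rules can be sketched like this. The addresses are hypothetical documentation addresses, not the ones from this lab, and the function names are made up for illustration. A priority of 1 is used as the default DR priority.

```python
import ipaddress

def elect_querier(addresses):
    """IGMP querier: the router with the lowest IP address on the segment."""
    return min(addresses, key=ipaddress.ip_address)

def elect_dr(priorities):
    """PIM DR: highest DR priority wins, highest IP address breaks ties."""
    return max(priorities,
               key=lambda ip: (priorities[ip], ipaddress.ip_address(ip)))

# Hypothetical routers on one segment, both with the default DR priority.
routers = {"192.0.2.2": 1, "192.0.2.10": 1}
print(elect_querier(routers))   # lowest IP becomes the querier
print(elect_dr(routers))        # highest IP becomes the DR

routers["192.0.2.2"] = 100      # raise the DR priority on the lower IP
print(elect_dr(routers))        # priority now beats the IP address
```

Note that the addresses are compared numerically, so 192.0.2.10 is higher than 192.0.2.2 even though a plain string comparison would say otherwise.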

So by now you should have a good grasp of SSM. It does not have a lot of moving parts which is nice.

Multicast – SSM mapping

This is a followup post to the first one on SSM. The topology is still the same.

If you want to find it in the documentation it is found in the IGMP configuration guide

I guess the reason to place it under IGMP is that SSM requires IGMPv3. To find SSM mapping go to Products-> Cisco IOS and NX-OS Software-> Cisco IOS-> Cisco IOS Software Release 12.4 Family-> Cisco IOS Software Releases 12.4T-> Configure-> Configuration Guides-> IP Multicast Configuration Guide Library, Cisco IOS Release 12.4T-> IP Multicast: IGMP Configuration Guide, Cisco IOS Release 12.4T-> SSM mapping

So why would we use SSM mapping in the first place? IGMPv3 is not supported everywhere yet. Maybe the Set Top Box (STB) does not support IGMPv3 but your ISP wants to support SSM. Then some transition mechanism must be used. There are a few options available like IGMPv3 lite, URD and SSM mapping. IGMPv3 lite is a daemon running on the host supporting a subset of IGMPv3 until proper IGMPv3 has been implemented. With URD a router intercepts the URL requests from the user and the router joins the multicast stream to the correct source even though the user is not sending IGMPv3 reports. This requires that the multicast group and source are coded into the web page with links to the multicast streams.

SSM mapping takes IGMPv2 reports and converts them to IGMPv3. We can either use a DNS server and query it for sources or use static mappings as I will explain here. Static mapping is done on the Last Hop Router (LHR) and it is fairly simple. This is how we configure it.

R2(config)#access-list 2 permit 225.0.0.100

R2(config)#ip igmp ssm-map enable

R2(config)#ip igmp ssm-map static 2 1.1.1.1

R2(config)#no ip igmp ssm-map query dns

The config is pretty self explanatory. First we create an access-list that defines the groups to be used for SSM mapping. Then we enable SSM mapping. Then we tie together the ACL with the sources that are allowed to send to those groups. Now we need to verify the mapping. First we take a look at R2 with show ip igmp ssm-mapping.

R2#show ip igmp ssm-mapping

SSM Mapping : Enabled

DNS Lookup : Disabled

Mcast domain : in-addr.arpa

Name servers : 255.255.255.255

Looks good so far. We will use R3 to simulate a client joining 225.0.0.100 via IGMPv2. We will debug IGMP to see the report coming in. R3 will join the group via the ip igmp join-group command. One thing is important to note here. Usually we configure ip igmp join-group on the downstream interface to simulate a LAN segment and then a PIM Join is sent upstream. In this case we want only the IGMP join to be sent, so we configure ip igmp join-group on the upstream interface. Also, no PIM should be enabled. This makes the router act as a pure host and not do any multicast routing. What would happen otherwise is that the router would have RPF failures when the source is sending traffic, because for traffic not in SSM mode an RPF lookup is done against the RP. Since no RP is configured the RPF check would fail. As a workaround we can configure a static RP; even though it isn’t really used, it would make the RPF check pass.

R3(config)#int fa0/0

R3(config-if)#ip igmp join-group 225.0.0.100

This is the debug output from R3.

IGMP(0): Send v2 Report for 225.0.0.100 on FastEthernet0/0

We can clearly see that IGMPv2 report was sent. Now we go to R2 to see if it is converting the IGMPv2 join to IGMPv3.

IGMP(0): Received v2 Report on FastEthernet0/0 from 23.23.23.3 for 225.0.0.100

IGMP(0): Convert IGMPv2 report (*, 225.0.0.100) to IGMPv3 with 1 source(s) using STATIC

It is clear that the conversion is taking place. We look in the MRIB as well.

R2#sh ip mroute | be \(

(*, 224.0.1.40), 03:18:48/00:02:54, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

FastEthernet0/0, Forward/Sparse, 03:18:48/00:02:54

Serial0/0, Forward/Sparse, 03:18:48/00:02:44

(1.1.1.1, 225.0.0.100), 03:18:26/00:02:57, flags: sTI

Incoming interface: Serial0/0, RPF nbr 12.12.12.1

Outgoing interface list:

FastEthernet0/0, Forward/Sparse, 03:18:26/00:02:57

We see that we now have (S,G) joins in R2! As a final step we will also verify in R1.

sh ip mroute | be \(

(*, 224.0.1.40), 03:20:44/stopped, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Serial0/1, Forward/Sparse, 03:20:44/00:00:49

(*, 225.0.0.100), 03:20:43/stopped, RP 0.0.0.0, flags: SP

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list: Null

(1.1.1.1, 225.0.0.100), 00:01:01/00:02:28, flags: T

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Serial0/0, Forward/Sparse, 00:01:01/00:03:27

Now the ping should be successful.

R1#ping

Protocol [ip]:

Target IP address: 225.0.0.100

Repeat count [1]: 5

Datagram size [100]:

Timeout in seconds [2]:

Extended commands [n]: y

Interface [All]: serial0/0

Time to live [255]:

Source address: 1.1.1.1

Type of service [0]:

Set DF bit in IP header? [no]:

Validate reply data? [no]:

Data pattern [0xABCD]:

Loose, Strict, Record, Timestamp, Verbose[none]:

Sweep range of sizes [n]:

Type escape sequence to abort.

Sending 5, 100-byte ICMP Echos to 225.0.0.100, timeout is 2 seconds:

Packet sent with a source address of 1.1.1.1

Reply to request 0 from 23.23.23.3, 16 ms

Reply to request 1 from 23.23.23.3, 16 ms

Reply to request 2 from 23.23.23.3, 16 ms

Reply to request 3 from 23.23.23.3, 16 ms

Reply to request 4 from 23.23.23.3, 16 ms

So the important thing here is to make R3 act as a pure host otherwise it will not work. This is a bit overkill for verification but I just wanted to show how it could be done.
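To recap what the static mapping on R2 does, here is a small sketch of the conversion from an IGMPv2 (*,G) report to (S,G) channels. The table mirrors the ACL and source configured in this post; the names `STATIC_MAPPINGS` and `map_v2_report` are made up, and the real feature can also use DNS lookups, which are not modeled here.

```python
import ipaddress

# Static mappings: (groups matched by the ACL, sources to map them to).
STATIC_MAPPINGS = [
    (ipaddress.ip_network("225.0.0.100/32"), ["1.1.1.1"]),
]

def map_v2_report(group):
    """Turn an IGMPv2 (*, G) report into a list of (S, G) channels."""
    g = ipaddress.ip_address(group)
    channels = []
    for acl, sources in STATIC_MAPPINGS:
        if g in acl:
            channels.extend((source, group) for source in sources)
    return channels

print(map_v2_report("225.0.0.100"))  # matched by the ACL, mapped to 1.1.1.1
print(map_v2_report("225.0.0.200"))  # not matched, no channel is created
```

A report for a group outside the ACL yields no channels, so no (S,G) join would be generated for it by the mapping.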

Source Specific Multicast (SSM) and IGMP filtering

Regular multicast is known as Any Source Multicast (ASM). It is based on a many-to-many model where the source can be anyone and only the group is known. For some applications, like a stock trading exchange, this is a good choice, but for IPTV usage it makes more sense to use SSM, as it scales better when there is no need for an RP.

ASM builds a shared tree (RPT) from the receiver to the RP and a Shortest Path Tree (SPT) from the sender to the RP. Everything must pass through the RP until the switchover to the SPT, which builds a tree directly from receiver to sender. The RPT uses a (*,G) entry and the SPT uses an (S,G) entry in the MRIB.

SSM uses no RP. Instead, the receiver uses IGMP version 3 to signal which channel (source) it wants to join for a group. IGMPv3 reports can use INCLUDE mode to specify that only the listed sources are allowed, or EXCLUDE mode to allow all sources except the listed ones.

SSM has the IP range 232.0.0.0/8 allocated, and it is the default range in IOS, but we can also use SSM for other IP ranges. If we do, we need to specify the range with an ACL.

SSM can be enabled on all routers that should work in SSM mode, but it is only really needed on the routers that have receivers connected, since that is where the behavior actually changes. Instead of sending a (*,G) join to the RP, the Last Hop Router (LHR) sends an (S,G) join directly toward the source.
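The difference in last hop router behavior described above can be sketched in a few lines of Python. This is only a simplified model, not how IOS implements it: the function name join_for_report and its parameters are made up for illustration, and it assumes a report without a source is simply ignored for groups in the SSM range.

```python
import ipaddress

# Default SSM range from the text. Additional ranges stand in for what an
# "ip pim ssm range <acl>" statement would add (illustrative assumption).
DEFAULT_SSM_RANGE = ipaddress.ip_network("232.0.0.0/8")


def join_for_report(group, source=None, ssm_ranges=(DEFAULT_SSM_RANGE,)):
    """Return the (source, group) join a last hop router would build.

    Simplified model: groups inside an SSM range produce an (S,G) join
    toward the source, everything else produces a (*,G) join toward the RP.
    """
    group_addr = ipaddress.ip_address(group)
    in_ssm_range = any(group_addr in net for net in ssm_ranges)
    if in_ssm_range:
        if source is None:
            # No source given: there is no channel to join in SSM.
            return None
        return (source, group)
    # ASM behavior: join the shared tree, whatever source was requested.
    return ("*", group)


# With only the default range, 225.0.0.1 is still treated as ASM.
print(join_for_report("225.0.0.1", "1.1.1.1"))
# After adding 225.0.0.0/24 as an SSM range, the same report gives an (S,G) join.
lab_ranges = (DEFAULT_SSM_RANGE, ipaddress.ip_network("225.0.0.0/24"))
print(join_for_report("225.0.0.1", "1.1.1.1", ssm_ranges=lab_ranges))
```

The second call mirrors what this lab does on R2: the group only gets SSM treatment once its range has been configured.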

This is the topology we are using.

It is really simple. R1 is acting as a multicast source and R2 will both simulate a client and do filtering. R3 will simulate an end host. R1 will source the traffic from its loopback. OSPF has been enabled on all relevant interfaces.

We will start by enabling SSM for the range 225.0.0.0/24 on R2.

R2#conf t
Enter configuration commands, one per line. End with CNTL/Z.
R2(config)#access-list 1 permit 225.0.0.0 0.0.0.255
R2(config)#ip pim ssm range 1
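The standard ACL uses a wildcard mask (0.0.0.255) rather than a prefix length. As a quick sanity check that it really covers 225.0.0.0/24, here is a small Python sketch. The helper name wildcard_to_network is made up for illustration, and it only handles contiguous wildcard masks.

```python
import ipaddress


def wildcard_to_network(address, wildcard):
    """Convert an IOS-style address and contiguous wildcard mask to a network."""
    wildcard_bits = int(ipaddress.IPv4Address(wildcard))
    # A wildcard mask is the bitwise inverse of the netmask.
    netmask = ipaddress.IPv4Address(wildcard_bits ^ 0xFFFFFFFF)
    return ipaddress.ip_network(f"{address}/{netmask}")


ssm_range = wildcard_to_network("225.0.0.0", "0.0.0.255")
print(ssm_range)                                        # 225.0.0.0/24
print(ipaddress.ip_address("225.0.0.1") in ssm_range)   # True
print(ipaddress.ip_address("225.0.1.1") in ssm_range)   # False
```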

R2 will now use SSM behavior for the 225.0.0.0/24 range. R2 will join the group 225.0.0.1. We will debug IGMP and PIM to follow everything that happens. When using igmp join-group on an interface, the router simulates an IGMP report coming in on that interface. We will see later why this is important. So first we enable debugging to the buffer. We must also enable multicast routing and enable PIM sparse-mode on the relevant interfaces.

R1#conf t
Enter configuration commands, one per line. End with CNTL/Z.
R1(config)#ip multicast-routing
R1(config)#int s0/0
R1(config-if)#ip pim sparse-mode
R1(config-if)#do debug ip pim
PIM debugging is on
R1(config-if)#

R2(config)#ip multicast-routing
R2(config)#int s0/0
R2(config-if)#ip pim sparse-mode
R2(config-if)#int f0/0
R2(config-if)#ip pim sparse-mode
R2(config-if)#ip igmp version 3
R2(config-if)#
*Mar 1 00:18:37.595: %PIM-5-DRCHG: DR change from neighbor 0.0.0.0 to 23.23.23.2 on interface FastEthernet0/0
R2(config-if)#do debug ip igmp
IGMP debugging is on
R2(config-if)#do debug ip pim
PIM debugging is on

Then we join the group on the Fa0/0 interface and look at what happens.

R2(config)#int f0/0
R2(config-if)#ip igmp join-group 225.0.0.1 source 1.1.1.1

We take a look at the log.

IGMP(0): Received v3 Report for 1 group on FastEthernet0/0 from 23.23.23.2
IGMP(0): Received Group record for group 225.0.0.1, mode 5 from 23.23.23.2 for 1 sources
IGMP(0): Updating expiration time on (1.1.1.1,225.0.0.1) to 180 secs
IGMP(0): Setting source flags 4 on (1.1.1.1,225.0.0.1)
IGMP(0): MRT Add/Update FastEthernet0/0 for (1.1.1.1,225.0.0.1) by 0
PIM(0): Insert (1.1.1.1,225.0.0.1) join in nbr 12.12.12.1's queue
IGMP(0): MRT Add/Update FastEthernet0/0 for (1.1.1.1,225.0.0.1) by 4
PIM(0): Building Join/Prune packet for nbr 12.12.12.1
PIM(0): Adding v2 (1.1.1.1/32, 225.0.0.1), S-bit Join
PIM(0): Send v2 join/prune to 12.12.12.1 (Serial0/0)
IGMP(0): Building v3 Report on FastEthernet0/0
IGMP(0): Add Group Record for 225.0.0.1, type 5
IGMP(0): Add Source Record 1.1.1.1
IGMP(0): Add Group Record for 225.0.0.1, type 6

R2 receives an IGMP report (created by itself), then generates a PIM join and sends it to R1. Let's look at how R1 receives it.

PIM(0): Received v2 Join/Prune on Serial0/0 from 12.12.12.2, to us
PIM(0): Join-list: (1.1.1.1/32, 225.0.0.1), S-bit set
PIM(0): RPF Lookup failed for 1.1.1.1
PIM(0): Add Serial0/0/12.12.12.2 to (1.1.1.1, 225.0.0.1), Forward state, by PIM SG Join

Then we verify by looking at the mroute table and by pinging.

R1#sh ip mroute 225.0.0.1 | be \(

(*, 225.0.0.1), 00:09:42/stopped, RP 0.0.0.0, flags: SP

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list: Null

(1.1.1.1, 225.0.0.1), 00:01:49/00:01:40, flags: T

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Serial0/0, Forward/Sparse, 00:01:49/00:02:39

Now we do a regular ping which should fail since we are not sourcing traffic from the loopback.

R1#ping 225.0.0.1 re 3
Type escape sequence to abort.
Sending 3, 100-byte ICMP Echos to 225.0.0.1, timeout is 2 seconds:
...

This is expected. One benefit of SSM is that it makes sending to a group from an arbitrary source more difficult, which is good from a security perspective.

Now we do an extended ping and source from the loopback.

R1#ping
Protocol [ip]:
Target IP address: 225.0.0.1
Repeat count [1]: 5
Datagram size [100]:
Timeout in seconds [2]:
Extended commands [n]: y
Interface [All]: serial0/0
Time to live [255]:
Source address: 1.1.1.1
Type of service [0]:
Set DF bit in IP header? [no]:
Validate reply data? [no]:
Data pattern [0xABCD]:
Loose, Strict, Record, Timestamp, Verbose[none]:
Sweep range of sizes [n]:
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 225.0.0.1, timeout is 2 seconds:
Packet sent with a source address of 1.1.1.1
Reply to request 0 from 12.12.12.2, 52 ms
Reply to request 1 from 12.12.12.2, 48 ms
Reply to request 2 from 12.12.12.2, 48 ms
Reply to request 3 from 12.12.12.2, 36 ms
Reply to request 4 from 12.12.12.2, 40 ms

So our SSM is working, and we didn’t even have to enable it on R1! What if we have clients that do not support IGMPv3? Then we could do SSM mapping. I could do that in another post if there is interest in it. For now, let's look at filtering. If we were using ASM, we would use a standard ACL matching the multicast groups that hosts are allowed to join. The joins would be (*,G), which is the same as host 0.0.0.0 in an ACL.

To filter SSM we use an extended ACL where the source in the ACL is the multicast source and the destination is the group to match. We will create an ACL permitting 1.1.1.1 as source for the groups 225.0.0.1, 225.0.0.2 and 225.0.0.3. Anything else will be denied, which we will see by debugging IGMP. When filtering, it is important to remember that the IGMP report generated by the router itself (igmp join-group) is also subject to the ACL, so make sure to include that.

R2(config)#ip access-list extended IGMP_FILTER
R2(config-ext-nacl)#permit igmp host 1.1.1.1 host 225.0.0.1
R2(config-ext-nacl)#permit igmp host 1.1.1.1 host 225.0.0.2
R2(config-ext-nacl)#permit igmp host 1.1.1.1 host 225.0.0.3
R2(config-ext-nacl)#deny igmp any any
R2(config-ext-nacl)#int f0/0
R2(config-if)#ip igmp access-group IGMP_FILTER

Now we make R3 join a group not allowed and look at the debug output on R2.

R3(config)#int f0/0
R3(config-if)#ip igmp version 3
R3(config-if)#ip igmp join-group 225.0.0.10 source 1.1.1.1

This is from the log on R2.

IGMP(0): Received v3 Report for 1 group on FastEthernet0/0 from 23.23.23.3

IGMP(*): Source: 1.1.1.1, Group 225.0.0.10 access denied on FastEthernet0/0

R2#sh ip access-lists IGMP_FILTER

Extended IP access list IGMP_FILTER

10 permit igmp host 1.1.1.1 host 225.0.0.1 (6 matches)

20 permit igmp host 1.1.1.1 host 225.0.0.2

30 permit igmp host 1.1.1.1 host 225.0.0.3

40 deny igmp any any (7 matches)

As we can see that group is not allowed so the IGMP join will not make it through.
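The matching logic of the filter can be sketched in Python to make the first-match and deny behavior explicit. This is only a rough model of the ACL above, not IOS code: the list layout and the function name report_allowed are made up for illustration, and only host and any entries are handled.

```python
# Hypothetical model of IGMP_FILTER: (action, source, group), first match wins.
IGMP_FILTER = [
    ("permit", "1.1.1.1", "225.0.0.1"),
    ("permit", "1.1.1.1", "225.0.0.2"),
    ("permit", "1.1.1.1", "225.0.0.3"),
    ("deny", "any", "any"),
]


def report_allowed(acl, source, group):
    """Return True if a report for (source, group) is permitted by the ACL."""
    for action, acl_source, acl_group in acl:
        source_matches = acl_source in ("any", source)
        group_matches = acl_group in ("any", group)
        if source_matches and group_matches:
            return action == "permit"
    # Nothing matched: an ACL ends with an implicit deny.
    return False


print(report_allowed(IGMP_FILTER, "1.1.1.1", "225.0.0.1"))   # True
print(report_allowed(IGMP_FILTER, "1.1.1.1", "225.0.0.10"))  # False
# An ASM-style (*,G) report is modeled with source 0.0.0.0 and is denied here.
print(report_allowed(IGMP_FILTER, "0.0.0.0", "225.0.0.1"))   # False
```

The second call corresponds to the report from R3 that shows up as access denied in the debug output.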

SSM can be very useful and it is not difficult to set up. In fact, it is mostly easier to set up than ASM.

Followup on multicast helper map

I had some requests for the final configs so I have fixed those. You can download them here. Also I had some issues getting the traffic through but thanks to my helpful readers like zumzum I now have it figured out.

Let's start on R4 since this is the source of the traffic.

R4 wants to send traffic to 1.1.1.1 with a source of 155.1.12.4. We know that route via RIP and the next-hop is 155.1.12.1. That network is directly connected (secondary). We need to find out the MAC address of 155.1.12.1 for our ARP entry. R3 has proxy ARP enabled, which is the default. However, it will not respond to R4's ARP request since it does not have the subnet 155.1.12.0/24 connected. R4 must therefore have a static ARP entry. I made an error here earlier by typing in R1's MAC, but this should be the MAC of R3 Fa0/0 since that is the link connecting us. We create the static ARP with arp 155.1.12.1 xxxx.xxxx.xxxx arpa. R4 now has all the info needed.

The packet travels to R3. R3 does not have a route for 1.1.1.1, so we create a static route: ip route 1.1.1.0 255.255.255.0 155.1.23.2. We also need a static route back to R4 for the 155.1.12.4 IP: ip route 155.1.12.4 255.255.255.255 155.1.34.4.

The packet now travels to R2. R2 also needs to know about 1.1.1.1, so we add a route there as well: ip route 1.1.1.0 255.255.255.0 155.1.12.1. R2 also needs to find its way back to R4, so we add a static route: ip route 155.1.12.4 255.255.255.255 155.1.23.3.

Packet goes to R1 which will respond. It will send the packet out Fa0/0. R1 needs to know the MAC address for 155.1.12.4. R2 has proxy ARP enabled so it will reply with its own MAC address to R1. R1 will insert this into ARP cache and adjacency table and then we are good to go.
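Why R2 needs a host route for 155.1.12.4 can be sketched with a small longest-prefix-match lookup in Python. This is only an illustration: the connected 155.1.12.0/24 entry on R2 is an assumption inferred from the walk-through (R1 and R2 share that subnet), next hops are written as router names instead of addresses, and the function name lookup is made up.

```python
import ipaddress

# Illustrative view of R2's routing table (next hops given as router names).
R2_ROUTES = [
    (ipaddress.ip_network("155.1.12.0/24"), "connected"),  # assumed link to R1
    (ipaddress.ip_network("1.1.1.0/24"), "R1"),            # static route to the loopback
    (ipaddress.ip_network("155.1.12.4/32"), "R3"),         # static host route back to R4
]


def lookup(routes, destination):
    """Return the next hop of the most specific route matching destination."""
    dst = ipaddress.ip_address(destination)
    matches = [(net, next_hop) for net, next_hop in routes if dst in net]
    if not matches:
        return None
    # Longest prefix wins, so the /32 beats the connected /24.
    best_net, best_next_hop = max(matches, key=lambda m: m[0].prefixlen)
    return best_next_hop


print(lookup(R2_ROUTES, "155.1.12.4"))  # R3
print(lookup(R2_ROUTES, "155.1.12.1"))  # connected
print(lookup(R2_ROUTES, "1.1.1.1"))     # R1
```

Without the /32 entry, R2 would treat 155.1.12.4 as part of its connected subnet and never forward the return traffic toward R3.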

So besides learning a multicast feature, we also got to practice building connectivity in an unusual way and thinking through the whole process of packet flow.