Archive

Design Considerations for North/South Flows in the Data Center

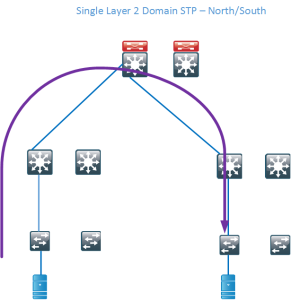

Traditional data centers have been built by using standard switches and running Spanning Tree (STP). STP blocks redundant links and builds a loop-free tree which is rooted at the STP root. This kind of topology wastes a lot of links which means that there is a decrease in bisectional bandwidth in the network. A traditional design may look like below where the blocking links have been marked with red color.

If we then remove the blocked links, the tree topology becomes very clear and you can see that there is only a single path between the servers. This wastes a lot of bandwidth and does not provide enough bisectional bandwidth. Bisectional bandwidth is the bandwidth that is available from the left half of the network to the right half of the network.

The traffic flow is highlighted below.

Technologies like FabricPath (FP) or TRILL can overcome these limitations by running ISIS and building loop-free topologies but not blocking any links. They can also take advantage of Equal Cost Multi Path (ECMP) paths to provide load sharing without doing any complex VLAN manipulations like with STP. A leaf and spine design is most commonly used to provide for a high amount of bisectional bandwidth.

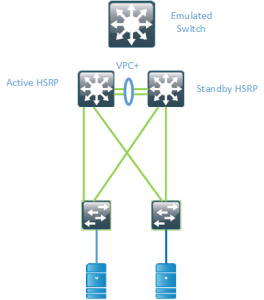

Hot Standby Routing Protocol (HSRP) has been around for a long time providing First Hop Redundancy (FHR) in our networks. The drawback of HSRP is that there is only one active forwarder. So even if we run a layer 2 multipath network through FP, for routed traffic flows, there will only be one active path.

The reason for this is that FP advertsises its Switch ID (SID) and that the Virtual MAC (vMAC) will be available behind the FP switch that is the HSRP active device. Switched flows can still use all of the available bandwidth.

To overcome this, there is the possibility of running VPC+ between the switches and having the switches advertise an emulated SID, pretending to be one switch so that the vMAC will be available behind that SID.

There are some drawbacks to this however. It requires that you run VPC+ in the spine layer and you can still only have 2 active forwarders. if you have more spine devices they will not be uitilized for Nort/South flows. To overcome this there is a feature called Anycast HSRP.

Anycast HSRP works in a similar way by advertising a virtual SID but it does not require links between the spines or VPC+. It also supports up to 4 active forwarders currently which provides for double the bandwidth compared to VPC+

Modern data centers provide for a lot more bandwidth and bisectional bandwidth than previous designs, but you still need to consider how routed flows can utilize the links in your network. This post should give you some insights on what to consider in such a scenario.