Archive

Introduction to Storage Networking and Design

Introduction

Storage and storage protocols are not generally well known by network engineers. Networking and storage have traditionally been two silos. Modern networks and data centers are looking to consolidate these two networks into one and to run them on a common transport such as Ethernet.

Hard Disks and Types of Storage

Hard disks can use different types of connectors and protocols.

- Advanced Technology Attachment (ATA)

- Serial ATA (SATA)

- Fibre Channel (FC)

- Small Computer System Interface (SCSI)

- Serial Attached SCSI (SAS)

ATA and SCSI are older standards; newer disks will typically use SATA or SAS, where SAS is more geared towards the enterprise market. FC is used to attach to a Storage Area Network (SAN).

Storage can either be file-level storage or block-level storage. File-level storage provides access to a file system through protocols such as Network File System (NFS) or Common Internet File System (CIFS). Block-level storage can be seen as raw storage that does not come with a file system. Block-level storage presents Logical Unit Numbers (LUNs) to servers and the server may then format that raw storage with a file system. VMware uses the Virtual Machine File System (VMFS) to format raw devices.

DAS, NAS and SAN

Storage can be accessed in different ways. Directly Attached Storage (DAS) is storage that is attached to a server; it may also be described as captive storage. DAS offers no efficient sharing of storage and can be complex to implement and manage. To be able to share files, the storage needs to be connected to the network. Network Attached Storage (NAS) enables the sharing of storage through the network and protocols such as NFS and CIFS. Internally, SCSI and RAID will commonly be implemented. A Storage Area Network (SAN) is a separate network that provides block-level storage, as compared to the NAS which provides file-level storage.

Virtualization of Storage

Everything is being abstracted and virtualized these days and storage is no exception. The goal of virtualization is to abstract from the physical layer, to provide better utilization and to allow changes to the storage system with little or no downtime. It is also key in scaling, since direct attached storage will not scale well. It also helps in decreasing the management complexity if multiple pools of storage can be accessed from one management tool. One basic form of virtualization is creating virtual disks that use a subset of the storage available on the physical device, such as when creating a virtual machine in VMware or with other hypervisors.

Virtualization exists at different levels such as block, disk, file system and file virtualization.

One form of file system virtualization is the concept of NAS where the storage is accessed through NFS or CIFS. The file system is shared among many hosts which may be running different operating systems such as Linux and Windows.

Block level storage can be virtualized through virtual disks. The goal of virtual disks is to make them flexible, being able to increase and decrease in size, provide as fast storage as needed and to increase the availability compared to physical disks.

There are also other forms of virtualization/abstracting where several LUNs can be hidden behind another LUN or where virtual LUNs are sliced from a physical LUN.

Storage Protocols

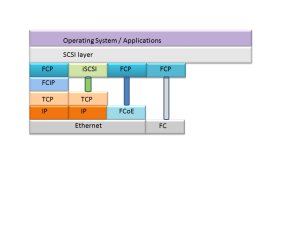

There are a number of protocols available for transporting storage traffic. Some of them are:

Internet Small Computer System Interface (iSCSI) – Transports SCSI requests over TCP/IP. Not suitable for high performance storage traffic

Fibre Channel Protocol (FCP) – It’s the interface protocol of SCSI on fibre channel

Fibre Channel over IP (FCIP) – A form of storage tunneling or FC tunneling where FC information is tunneled through the IP network. Encapsulates the FC block data and transports it through a TCP socket

Fibre Channel over Ethernet (FCoE) – Encapsulating FC information into Ethernet frames and transporting them on the Ethernet network

Fibre Channel

Fibre channel is a technology to attach to and transfer storage. FC requires lossless transfer of storage traffic which has been difficult/impossible to provide on traditional IP/Ethernet based networks. FC has provided more bandwidth traditionally than Ethernet, running at speeds such as 8 Gbit/s and 16 Gbit/s but Ethernet is starting to take over the bandwidth race with speeds of 10, 40, 100 or even 400 Gbit/s achievable now or in the near future.

There are a lot of terms in Fibre Channel which are not familiar to those of us coming from the networking side. I will go through some of them here:

Host Bus Adapter (HBA) – A card with FC ports to connect to storage, the equivalent of a NIC

N_Port – Node port, a port on a FC host

F_Port – Fabric port, port on a switch

E_Port – Expansion port, port connecting two fibre channel switches and carrying frames for configuration and fabric management

TE_Port – Trunking E_Port, Cisco MDS switches use Enhanced Inter Switch Link (EISL) to carry these frames. VSANs are supported with TE_Ports, carrying traffic for several VSANs over one physical link

World Wide Name (WWN) – All FC devices have a unique identity called WWN which is similar to how all Ethernet cards have a MAC address. Each N_Port has its own WWN

World Wide Node Name (WWNN) – A globally unique identifier assigned to each FC node or device. For servers and hosts, the WWNN is unique for each HBA. If a server has two HBAs, it will have two WWNNs.

World Wide Port Name (WWPN) – A unique identifier for each FC port of any FC device. A server will have a WWPN for each port of the HBA. A switch has a WWPN for each port of the switch.

Initiator – Clients called initiators issue SCSI commands to request services from logical units on a server that is known as a target
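To make the MAC address comparison concrete, here is a minimal Python sketch that parses and formats a WWN. It assumes the common notation of eight colon-separated hex bytes (a WWN is a 64-bit value); the function names and the sample WWPN are made up for illustration.

```python
import re

# A WWN is a 64-bit value, conventionally written as 8 colon-separated hex bytes.
_WWN_RE = re.compile(r"^[0-9a-fA-F]{2}(:[0-9a-fA-F]{2}){7}$")


def parse_wwn(text: str) -> int:
    """Return the WWN as a 64-bit integer, or raise ValueError if malformed."""
    if not _WWN_RE.match(text):
        raise ValueError(f"not a valid WWN: {text!r}")
    return int(text.replace(":", ""), 16)


def format_wwn(value: int) -> str:
    """Format a 64-bit integer back into colon-separated lowercase hex bytes."""
    return ":".join(f"{b:02x}" for b in value.to_bytes(8, "big"))


# Hypothetical WWPN, used only as sample input.
wwpn = parse_wwn("20:00:00:25:b5:00:00:0a")
print(format_wwn(wwpn))
```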

Fibre channel has many similarities to IP (TCP) when it comes to communicating.

- Point to point oriented – facilitated through device login

  - Similar to TCP session establishment

- N_Port to N_Port connection – logical node connection point

  - Similar to TCP/UDP sockets

- Flow controlled – hop by hop and end-to-end basis

  - Similar to TCP flow control but a different mechanism where no drops are allowed

- Acknowledged – For certain types of traffic but not for others

  - Similar to how TCP acknowledges segments

- Multiple connections allowed per device

  - Similar to TCP/UDP sockets

Buffer to Buffer Credits

FC requires lossless transport and this is achieved through B2B credits.

- Source regulated flow control

- B2B credits used to ensure that FC transport is lossless

- The number of credits is negotiated between ports when the link is brought up

- The number of credits is decremented with each frame placed on the wire

- Does not rely on frame size

- If the number of credits is 0, transmission is stopped

- Number of credits incremented when a Receiver Ready (R_RDY) primitive is received

- The number of B2B credits needs to be taken into consideration as bandwidth and/or distance increases
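The credit mechanism can be sketched in a few lines of Python. This is a simplified model, not an implementation of the FC standard: the class and function names are made up, the receiver's ready signal is modeled as a plain method call, and the distance estimate assumes roughly 5 microseconds of propagation delay per kilometer of fiber, a full-size frame of 2148 bytes and the nominal link rate with no encoding overhead.

```python
import math


class B2BPort:
    """Toy model of a sending port using buffer-to-buffer credits."""

    def __init__(self, negotiated_credits: int):
        self.negotiated = negotiated_credits  # agreed when the link came up
        self.credits = negotiated_credits

    def send_frame(self, size_bytes: int) -> bool:
        """Send one frame if a credit is available; frame size does not matter."""
        if self.credits == 0:
            return False  # out of credits: transmission stops, nothing is dropped
        self.credits -= 1
        return True

    def receive_ready(self) -> None:
        """The receiver freed a buffer, so one credit is returned."""
        self.credits = min(self.credits + 1, self.negotiated)


def credits_for_distance(distance_km: float, rate_bps: float,
                         frame_bytes: int = 2148, us_per_km: float = 5.0) -> int:
    """Rough number of credits needed to keep a link of this length busy."""
    round_trip_s = 2 * distance_km * us_per_km * 1e-6
    frame_time_s = frame_bytes * 8 / rate_bps
    return math.ceil(round_trip_s / frame_time_s)


port = B2BPort(negotiated_credits=2)
print(port.send_frame(64), port.send_frame(2112), port.send_frame(64))
print(credits_for_distance(distance_km=10, rate_bps=8e9))
```

The estimate shows why the last bullet matters: doubling either the distance or the bandwidth doubles the number of frames in flight, and therefore the credits needed to avoid stalling the sender.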

Virtual SAN (VSAN)

Virtual SANs allow better utilization of the physical fabric, essentially providing the same functionality for FC as 802.1Q VLANs do for Ethernet.

- Virtual fabrics created from a larger cost-effective and redundant physical fabric

- Reduces waste of ports of a SAN island approach

- Fabric events are isolated per VSAN, allowing for higher availability and isolation

- FC features can be configured per VSAN, allowing for greater versatility

Fabric Shortest Path First (FSPF)

To find the best path through the fabric, FSPF can be used. The concept should be very familiar if you know OSPF.

- FSPF routes traffic based on the destination Domain ID

- For FSPF a Domain ID identifies a VSAN in a single switch

- The maximum number of switches supported in a fabric is then limited to 239

- FSPF

- Performs hop-by-hop routing

- The total cost is calculated to find the least cost path

- Supports the use of equal cost load sharing over links

- Link costs can be manually adjusted to affect the shortest paths

- Uses Dijkstra algorithm

- Runs only on E_Ports or TE_Ports and provides loop free topology

- Runs on a per VSAN basis
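The path selection described above can be sketched as a plain Dijkstra run over Domain IDs. This is an illustration of the idea, not FSPF itself: the topology, the link costs and the function name are invented, and costs are assumed to be positive.

```python
import heapq


def fspf_least_cost(links, source):
    """Least-cost distance and equal-cost first hops from `source`.

    links: {(domain_a, domain_b): cost}, treated as bidirectional.
    Returns (dist, next_hops) keyed by destination Domain ID.
    """
    graph = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))

    dist = {source: 0}
    next_hops = {source: set()}
    heap = [(0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        for nbr, cost in graph.get(node, []):
            nd = d + cost
            # Directly connected neighbors are their own first hop.
            hops = {nbr} if node == source else next_hops[node]
            if nbr not in dist or nd < dist[nbr]:
                dist[nbr] = nd
                next_hops[nbr] = set(hops)
                heapq.heappush(heap, (nd, nbr))
            elif nd == dist[nbr]:
                next_hops[nbr] |= hops  # equal cost path: load share
    return dist, next_hops


# Four switches (Domain IDs 1-4) in a square, arbitrary equal link costs.
links = {(1, 2): 250, (1, 3): 250, (2, 4): 250, (3, 4): 250}
dist, hops = fspf_least_cost(links, source=1)
print(dist[4], sorted(hops[4]))
```

Raising the cost of one link, for example `links[(3, 4)] = 500`, leaves only Domain ID 2 as the first hop towards Domain ID 4, which is the effect of manually adjusting link costs.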

Zoning

To provide security in the SAN, zoning can be implemented.

- Zones are a basic form of data path security

- A bidirectional ACL

- Zone members can only “see” and talk to other members of the zone. Similar to PVLAN community port

- Devices can be members of several zones

- By default, devices that are not members of a zone will be isolated from other devices

- Zones belong to a zoneset

- The zoneset must be active to enforce the zoning

- Only one active zoneset per fabric or per VSAN
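As a rough illustration of the zoning rules above, the following Python sketch models an active zoneset as a mapping from zone name to a set of member WWPNs. The zone names and WWPNs are invented, and real switches offer more member types than WWPNs; the point is only the default-deny, shared-zone logic.

```python
def can_communicate(active_zoneset, wwpn_a, wwpn_b):
    """Two devices may talk only if at least one active zone contains both."""
    return any(
        wwpn_a in members and wwpn_b in members
        for members in active_zoneset.values()
    )


# Hypothetical zoneset: one zone per server, both sharing the same array port.
active_zoneset = {
    "zone_server1": {"10:00:00:00:00:00:00:01", "50:00:00:00:00:00:00:aa"},
    "zone_server2": {"10:00:00:00:00:00:00:02", "50:00:00:00:00:00:00:aa"},
}

# Each server reaches the array, but the servers cannot see each other.
print(can_communicate(active_zoneset,
                      "10:00:00:00:00:00:00:01", "50:00:00:00:00:00:00:aa"))
print(can_communicate(active_zoneset,
                      "10:00:00:00:00:00:00:01", "10:00:00:00:00:00:00:02"))
```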

SAN Drivers

What are the drivers for implementing a SAN?

- Lower Total Cost of Ownership (TCO)

- Consolidation of storage

- To provide better utilization of storage resources

- Provide high availability

- Provide better manageability

Storage Design Principles

These are some of the important factors when designing a SAN:

- Plan a network that can handle the number of ports now and in the future

- Plan the network with a given end-to-end performance and throughput level in mind

- Don’t forget about physical requirements

- Connectivity to remote data centers may be needed to meet the business requirements of business continuity and disaster recovery

- Plan for an expected lifetime of the SAN and make sure the design can support the SAN for its expected lifetime

Device Oversubscription and Consolidation

- Most SAN designs will have oversubscription or fan-out from the storage devices to the hosts.

- Follow guidelines from the storage vendor to not oversubscribe the fabric too heavily.

- Consolidate the storage but be aware of the larger failure domain and fate sharing

- VSANs enable consolidation while still keeping separate failure domains

When consolidating storage, there is an increased risk that all of the storage or a large part of it can be brought offline if the fabric or storage controllers fail. Also be aware that when using virtualization techniques such as VSANs, there is fate sharing because several virtual topologies use the same physical links.
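The fan-out ratio mentioned above is simple arithmetic: the aggregate host bandwidth divided by the aggregate storage port bandwidth. A small sketch, with port counts and speeds chosen purely as an example:

```python
def fan_out_ratio(host_ports: int, host_gbps: float,
                  storage_ports: int, storage_gbps: float) -> float:
    """Aggregate host bandwidth divided by aggregate storage bandwidth."""
    return (host_ports * host_gbps) / (storage_ports * storage_gbps)


# Example numbers only: 48 hosts at 8 Gbit/s sharing 4 storage ports at 16 Gbit/s.
ratio = fan_out_ratio(host_ports=48, host_gbps=8, storage_ports=4, storage_gbps=16)
print(f"{ratio:.0f}:1")
```

Whether a given ratio is acceptable depends on the storage vendor's guidance and on how busy the hosts really are, so the result should be compared against those guidelines rather than a fixed number.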

Convergence and Stability

- To support fast convergence, the number of switches in the fabric should not be too large

- Be aware of the number of parallel links, a lot of links will increase processing time and SPF run time

- Implement appropriate levels of redundancy in the network layer and in the SAN fabric

The above guidelines are very general, but the key here is that providing too much redundancy may actually decrease the availability as the Mean Time to Repair (MTTR) increases in case of a failure. The more nodes and links in the fabric, the larger the link state database gets, and this will lead to SPF runs taking longer. The general rule is that two links are enough and that three is the maximum; anything more than that is overdoing it. The use of port channels can help in achieving redundancy while keeping the number of logical links in check.
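The link between MTTR and availability can be shown with the standard steady-state formula, availability = MTBF / (MTBF + MTTR). The numbers below are invented to illustrate the trend and are not measurements from any fabric.

```python
def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability from mean time between failures and repair time."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Same failure rate, but a more complex fabric that takes longer to repair.
simple = availability(mtbf_hours=10_000, mttr_hours=1)
complex_fabric = availability(mtbf_hours=10_000, mttr_hours=8)
print(f"{simple:.5f} vs {complex_fabric:.5f}")
```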

SAN Security

Security is always important but in the case of storage it can be very critical and regulated by PCI DSS, HIPAA, SOX or other standards. Having poor security on the storage may then not only get you fired but behind bars so security is key when designing a SAN. These are some recommendations for SAN security:

- Use secure role-based management with centralized authentication, authorization and logging of all the changes

- Centralized authentication should be used for the networking devices as well

- Only authorized devices should be able to connect to the network

- Traffic should be isolated and secured with access controls so that devices on the network can send and receive data securely while being protected from other activities of the network

- All data leaving the storage network should be encrypted to ensure business continuance

- Don’t forget about remote vaulting and backup

- Ensure the SAN and network passes any regulations such as PCI DSS, HIPAA, SOX etc

SAN Topologies

There are a few common designs in SANs depending on the size of the organization. We will discuss a few of them here and their characteristics and strong/weak points.

Collapsed Core Single Fabric

In the collapsed core, both the initiator and the target are connected through the same device. This means all traffic can be switched without using any Inter Switch Links (ISL). This provides for full non-blocking bandwidth and there should be no oversubscription. It’s a simple design to implement and support and it’s also easy to manage compared to more advanced designs.

The main concern of this design is how redundant the single switch is. Does it provide redundant power? Does it have a single fabric or an extra fabric for redundancy? Does the switch have redundant supervisors? At the end of the day, a single device may go belly up so you have to consider the time it would take to restore your fabric and if this downtime is acceptable compared to a design with more redundancy.

Collapsed Core Dual Fabric

The dual fabric design removes the Single Point of Failure (SPoF) of the single switch design. Every host and storage device is connected to both fabrics so there is no need for an ISL. The ISL would only be useful in case the storage device loses its port towards fabric A and the server loses its port towards fabric B. This scenario may not be that likely though.

The drawback compared to the single fabric is the cost of getting two of every piece of equipment to create the dual fabric design.

Core Edge Dual Fabric

For large scale SAN designs, the fabric is divided into a core and edge part where the storage is connected to the edge of the fabric. This design is dual fabric to provide high availability. The storage and servers are not connected to the same device, meaning that storage traffic must pass the ISL links between the core and the edge. The ISL links must be able to handle the load so that the oversubscription ratio is not too high.

The more devices that get added to a fabric, the more complex it gets and the more devices you have to manage. For a large design you may not have many options though.

Fibre Channel over Ethernet (FCoE)

Maintaining one network for storage and one for normal user data is costly and complex. It also means that you have a lot of devices to manage. Wouldn’t it be better if storage traffic could run on the normal network as well? That is where FCoE comes into play. The FC frames are encapsulated into Ethernet frames and can be sent on the Ethernet network. However, Ethernet isn’t lossless, is it? That is where Data Center Bridging (DCB) comes into play.
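The encapsulation can be sketched as follows. This is a simplified illustration based on my understanding of the FCoE frame layout (EtherType 0x8906, a 14-byte FCoE header ending in the start-of-frame byte, and a 4-byte trailer starting with the end-of-frame byte). The function name is made up, the SOF and EOF code points are left to the caller, and the 802.1Q tag and the Ethernet FCS are omitted.

```python
import struct

FCOE_ETHERTYPE = 0x8906


def encapsulate_fcoe(dst_mac: bytes, src_mac: bytes, fc_frame: bytes,
                     sof: int, eof: int) -> bytes:
    """Wrap an FC frame in a (simplified) FCoE Ethernet frame."""
    if len(dst_mac) != 6 or len(src_mac) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    eth_header = dst_mac + src_mac + struct.pack("!H", FCOE_ETHERTYPE)
    # Version (0) plus reserved bits fill 13 bytes, followed by the SOF byte.
    fcoe_header = bytes(13) + bytes([sof])
    # EOF byte followed by 3 reserved bytes.
    fcoe_trailer = bytes([eof]) + bytes(3)
    return eth_header + fcoe_header + fc_frame + fcoe_trailer


# Placeholder values: zeroed MACs, a dummy 36-byte FC frame, dummy SOF/EOF codes.
frame = encapsulate_fcoe(bytes(6), bytes(6), bytes(36), sof=0x01, eof=0x02)
print(len(frame), frame[12:14].hex())
```

Because a full-size FC frame is larger than the standard 1500-byte Ethernet payload, the Ethernet network has to support larger frames for FCoE to work.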

Data Center Bridging (DCB)

Ethernet is not a lossless protocol. Some devices may have support for the use of PAUSE frames but these frames would stop all communication, meaning your storage traffic would come to a halt as well. There was no way of pausing only a certain type of traffic. To provide lossless transfer of frames, new enhancements to Ethernet had to be added.

Priority Flow Control (PFC)

- PFC is defined in 802.1Qbb and provides PAUSE based on 802.1p CoS

- When a link is congested, CoS assigned to “no-drop” will be paused

- Other traffic assigned to other CoS values will continue to transmit and rely on upper layer protocols for retransmission

- PFC is not limited to FCoE traffic
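The difference between pausing one class and dropping the others can be modeled with a toy receiver. This sketch is not how a switch ASIC implements PFC (real devices pause before the buffer is full and keep headroom for frames in flight); the class name, queue limit and CoS values are arbitrary choices for the example.

```python
from collections import deque


class PfcPort:
    """Toy receiver with one queue per CoS value and a set of no-drop classes."""

    def __init__(self, queue_limit: int, no_drop_cos):
        self.queue_limit = queue_limit
        self.no_drop_cos = set(no_drop_cos)
        self.queues = {cos: deque() for cos in range(8)}
        self.paused = set()                      # classes the sender must hold
        self.dropped = {cos: 0 for cos in range(8)}

    def receive(self, cos: int, frame) -> bool:
        queue = self.queues[cos]
        if len(queue) >= self.queue_limit:
            self.dropped[cos] += 1               # full queue: frame is lost
            return False
        queue.append(frame)
        if cos in self.no_drop_cos and len(queue) >= self.queue_limit:
            self.paused.add(cos)                 # pause only this class
        return True

    def drain(self, cos: int):
        queue = self.queues[cos]
        frame = queue.popleft() if queue else None
        if cos in self.paused and len(queue) < self.queue_limit:
            self.paused.discard(cos)             # room again: resume the class
        return frame


port = PfcPort(queue_limit=2, no_drop_cos={3})
for frame in ("a", "b"):
    port.receive(3, frame)       # no-drop class fills up and gets paused
for frame in ("x", "y", "z"):
    port.receive(0, frame)       # best effort class: the third frame is dropped
print(port.paused, port.dropped[0])
```

A well-behaved sender checks `paused` before transmitting a no-drop class, which is what keeps that class lossless while other classes rely on upper layer retransmission.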

It is also desirable to be able to guarantee traffic a certain amount of the bandwidth available and to not have a class of traffic use up all the bandwidth. This is where Enhanced Transmission Selection (ETS) has its use.

Enhanced Transmission Selection (ETS)

- Defined in 802.1Qaz and prevents a single traffic class from using all the bandwidth leading to starvation of other classes

- If a class does not fully use its share, that bandwidth can be used by other classes

- Helps to accommodate classes that have a bursty nature

The concept is very similar to doing egress queuing through MQC on a Cisco router.
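The sharing behavior can be illustrated with a small allocation function: each class gets its configured share, and whatever a class does not use is redistributed to the classes that still have traffic, in proportion to their shares. This is a sketch of the idea rather than the scheduler defined in the standard; the class names, shares and demands are made up.

```python
def ets_allocate(link_gbps: float, shares: dict, demand: dict) -> dict:
    """Split link bandwidth by share, handing unused bandwidth to busy classes.

    shares: class -> percentage (assumed positive); demand: class -> Gbit/s offered.
    """
    alloc = {c: 0.0 for c in shares}
    active = {c for c in shares if demand.get(c, 0) > 0}
    remaining = float(link_gbps)
    while active and remaining > 1e-9:
        total_share = sum(shares[c] for c in active)
        satisfied = set()
        used = 0.0
        for c in active:
            offer = remaining * shares[c] / total_share
            grant = min(offer, demand[c] - alloc[c])
            alloc[c] += grant
            used += grant
            if alloc[c] >= demand[c] - 1e-9:
                satisfied.add(c)
        remaining -= used
        if not satisfied:
            break                    # every active class took its full offer
        active -= satisfied          # redistribute what the others left over
    return alloc


shares = {"fcoe": 50, "lan": 30, "other": 20}
demand = {"fcoe": 2, "lan": 10, "other": 10}   # FCoE only needs 2 of its 5 Gbit/s
print(ets_allocate(10, shares, demand))
```

In this example the FCoE class leaves 3 Gbit/s unused, which is split between the other two classes in a 30:20 ratio.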

We now have support for lossless Ethernet but how can we tell if a device has implemented these features? Through the use of Data Center Bridging eXchange (DCBX).

Data Center Bridging Exchange (DCBX)

- DCBX is LLDP with new TLV fields

- Negotiates PFC, ETS, CoS values between DCB capable devices

- Simplifies management because parameters can be distributed between nodes

- Responsible for logical link up/down signaling of Ethernet and Fibre Channel

What is the goal of running FCoE? What are the drivers for running storage traffic on our normal networks?

Unified Fabric

Data centers require a lot of cabling, power and cooling. Because storage and servers have required separate networks, a lot of cabling has been used to build these networks. With a unified fabric, a lot of cabling can be removed and the storage traffic can use the regular IP/Ethernet network, so that only half the number of cables is needed. The following are some reasons for striving for a unified fabric:

- Reduced cabling

  - Every server only requires 2xGE or 2x10GE instead of 2 Ethernet ports and 2 FC ports

- Fewer access layer switches

  - A typical Top of Rack (ToR) design may have two switches for networking and two for storage; two switches can then be removed

- Fewer network adapters per server

  - A Converged Network Adapter (CNA) combines networking and storage functionality so that half of the NICs can be removed

- Power and cooling savings

  - Fewer NICs mean less power, which then also saves on cooling. The reduced cabling may also improve the airflow in the data center

- Management integration

  - A single network infrastructure and fewer devices to manage decrease the overall management complexity

- Wire once

  - There is no need to recable to provide network or storage connectivity to a server

Conclusion

This post is aimed at giving the network engineer an introduction to storage. Traditionally there have been silos between servers, storage and networking people but these roles are seeing a lot more overlap in modern networks. We will see networks built to carry both data and storage traffic and to make storage less complex. Protocols like iSCSI may get a larger share of the storage world in the future and FCoE may become larger as well.

Next Generation Multicast – NG-MVPN

Introduction

Multicast is a great technology that, although it provides great benefits, is seldom deployed. It’s a lot like IPv6 in that regard. Service providers or enterprises that run MPLS and want to provide multicast services have not been able to use MPLS to provide multicast. Multicast has then typically been delivered by using Draft Rosen, which is an mGRE technology. This post starts with a brief overview of Draft Rosen.

Draft Rosen

Draft Rosen uses GRE as an overlay protocol. That means that all multicast packets will be encapsulated inside GRE. A virtual LAN is emulated by having all PE routers in the VPN join a multicast group. This is known as the default Multicast Distribution Tree (MDT). The default MDT is used for PIM hello’s and other PIM signaling but also for data traffic. If the source sends a lot of traffic it is inefficient to use the default MDT and a data MDT can be created. The data MDT will only include PE’s that have receivers for the group in use.

Draft Rosen is fairly simple to deploy and works well but it has a few drawbacks. Let’s take a look at these:

- Overhead – GRE adds 24 bytes of overhead to the packet. Compared to MPLS which typically adds 8 or 12 bytes there is 100% or more of overhead added to each packet

- PIM in the core – Draft Rosen requires that PIM is enabled in the core because the PE’s must join the default and or data MDT which is done through PIM signaling. If PIM ASM is used in the core, an RP is needed as well. If PIM SSM is run in the core, no RP is needed.

- Core state – Unnecessary state is created in the core due to the PIM signaling from the PE’s. The core should have as little state as possible

- PIM adjacencies – The PE’s will become PIM neighbors with each other. If it’s a large VPN and a lot of PE’s, a lot of PIM adjacencies will be created. This will generate a lot of hello’s and other signaling which will add to the burden of the router

- Unicast vs multicast – Unicast forwarding uses MPLS, multicast uses GRE. This adds complexity and means that unicast is using a different forwarding mechanism than multicast, which is not the optimal solution

- Inefficiency – The default MDT sends traffic to all PE’s in the VPN regardless of whether the PE has a receiver in the (*,G) or (S,G) for the group in use
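The overhead figure in the first bullet follows from simple arithmetic, shown here using the byte counts from the list (24 bytes for GRE, 8 or 12 bytes for MPLS, which corresponds to two or three 4-byte labels).

```python
GRE_OVERHEAD_BYTES = 24  # value taken from the list above


def extra_overhead_percent(mpls_overhead_bytes: int) -> float:
    """How much more encapsulation overhead GRE adds compared to MPLS."""
    extra = GRE_OVERHEAD_BYTES - mpls_overhead_bytes
    return extra / mpls_overhead_bytes * 100


for mpls_bytes in (12, 8):
    print(mpls_bytes, extra_overhead_percent(mpls_bytes))
```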

Based on this list, it is clear that there is room for improvement. The things we are looking to achieve with another solution are:

- Shared control plane with unicast

  - Fewer protocols to manage in the core

- Shared forwarding plane with unicast

  - Only use MPLS as encapsulation

  - Fast Restoration (FRR)

NG-MVPN

To be able to build multicast Label Switched Paths (LSPs) we need to provide these labels in some way. There are three main options to provide these labels today:

- Multipoint LDP (mLDP)

- RSVP-TE P2MP

- Unicast MPLS + Ingress Replication (IR)

mLDP is an extension to the familiar Label Distribution Protocol (LDP). It supports both P2MP and MP2MP LSPs and is defined in RFC 6388.

RSVP-TE P2MP is an extension to the unicast RSVP-TE which some providers use today to build LSPs as opposed to LDP. It is defined in RFC 4875.

Unicast MPLS uses unicast and no additional signaling in the core. It does not use a multipoint LSP.

Multipoint LSP

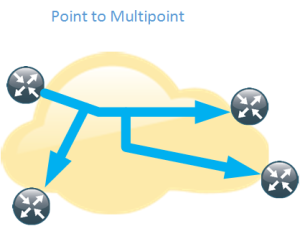

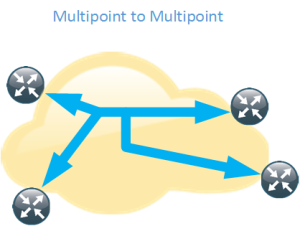

Normal unicast forwarding through MPLS uses a point to point LSP. This is not efficient for multicast. To overcome this, multipoint LSPs are used instead. There are two different types, point to multipoint and multipoint to multipoint.

Point to multipoint (P2MP):

- Replication of traffic in core

- Allows only the root of the P2MP LSP to inject packets into the tree

- If signaled with mLDP – Path based on IP routing

- If signaled with RSVP-TE – Constraint-based/explicit routing. RSVP-TE also supports admission control

Multipoint to multipoint (MP2MP):

- Replication of traffic in core

- Bidirectional

- All the leaves of the LSP can inject and receive packets from the LSP

- Signaled with mLDP

- Path based on IP routing
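To see why replication in the core matters, the sketch below counts how many copies of a packet cross each link when the ingress PE sends one unicast copy per receiver, compared with a multipoint LSP where each link carries a single copy. The topology and node names are invented for the example.

```python
from collections import Counter


def link_load(paths):
    """Count packet copies per link for one multicast packet.

    paths: leaf -> list of nodes from the root to that leaf.
    Returns (ingress_replication, multipoint_lsp) counters keyed by link.
    """
    ingress_replication = Counter()
    multipoint_lsp = Counter()
    for path in paths.values():
        for link in zip(path, path[1:]):
            ingress_replication[link] += 1   # one unicast copy per leaf
            multipoint_lsp[link] = 1         # shared tree: one copy per link
    return ingress_replication, multipoint_lsp


# Hypothetical core: PE1 is the root, P1 is the only core router.
paths = {
    "PE2": ["PE1", "P1", "PE2"],
    "PE3": ["PE1", "P1", "PE3"],
    "PE4": ["PE1", "P1", "PE4"],
}
ingress, tree = link_load(paths)
print(ingress[("PE1", "P1")], tree[("PE1", "P1")])
```

With three receivers behind P1, ingress replication sends three copies over the PE1 to P1 link, while the multipoint LSP sends one and lets P1 replicate.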

Core Tree Types

Depending on the number of sources and where the sources are located, different types of core trees can be used. If you are familiar with Draft Rosen, you may know of the default MDT and the data MDT.

Signalling the Labels

As mentioned previously there are three main ways of signalling the labels. We will start by looking at mLDP.

- LSPs are built from the leaf to the root

- Supports P2MP and MP2MP LSPs

- mLDP with MP2MP provides great scalability advantages for “any to any” topologies

- “any to any” communication applications:

- mVPN supporting bidirectional PIM

- mVPN Default MDT model

- If a provider does not want tree state per ingress PE source

- Supports Fast Reroute (FRR) via RSVP-TE unicast backup path

- No periodic signaling, reliable using TCP

- Control plane is P2MP or MP2MP

- Data plane is P2MP

- Scalable due to receiver driven tree building

- Supports MP2MP

- Does not support traffic engineering
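The receiver driven, leaf to root tree building can be sketched as follows. Each leaf walks towards the root along its unicast next hop and stops as soon as it reaches a node that is already on the tree. This only models the resulting replication state; it is not the mLDP protocol, and the topology, names and next-hop table are invented.

```python
def build_p2mp_tree(root, leaves, next_hop_to_root):
    """Return replication state: node -> set of downstream neighbors."""
    state = {}
    for leaf in leaves:
        node = leaf
        while node != root:
            upstream = next_hop_to_root[node]
            already_on_tree = upstream in state
            state.setdefault(upstream, set()).add(node)
            if already_on_tree:
                break            # upstream has already joined towards the root
            node = upstream
    return state


# Hypothetical topology: PE1 (root) - P1 - P2, with PE4 on P1 and PE2/PE3 on P2.
next_hop_to_root = {"PE2": "P2", "PE3": "P2", "PE4": "P1", "P2": "P1", "P1": "PE1"}
tree = build_p2mp_tree("PE1", ["PE2", "PE3", "PE4"], next_hop_to_root)
for node in sorted(tree):
    print(node, sorted(tree[node]))
```

The second and third leaves only create state on the router where they attach to the existing tree, which hints at why receiver driven tree building scales well.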

RSVP-TE can be used as well with the following characteristics.

- LSPs are built from the head-end to the tail-end

- Supports only P2MP LSPs

- Supports traffic engineering

- Bandwidth reservation

- Explicit routing

- Fast Reroute (FRR)

- Signaling is periodic

- P2P technology at control plane

- Inherits P2P scaling limitations

- P2MP at the data plane

- Packet replication in the core

RSVP-TE will mostly be interesting for SPs that are already running RSVP-TE for unicast or for SPs involved in video delivery. The following table shows a comparison of the different protocols.

Assigning Flows to LSPs

After the LSPs have been signalled, we need to get traffic onto the LSPs. This can be done in several different ways.

- Static

- PIM

- RFC 6513

- BGP Customer Multicast (C-Mcast)

- RFC 6514

- Also describes Auto-Discovery

- mLDP inband signaling

- RFC 6826

Static

- Mostly applicable to RSVP-TE P2MP

- Static configuration of multicast flows per LSP

- Allows aggregation of multiple flows in a single LSP

PIM

- Dynamically assigns flows to an LSP by running PIM over the LSP

- Works over MP2MP and PPMP LSP types

- Mostly used but not limited to default MDT

- No changes needed to PIM

- Allows aggregation of multiple flows in a single LSP

BGP Auto-Discovery

- Auto-Discovery

- The process of discovering all the PE’s with members in a given mVPN

- Used to establish the MDT in the SP core

- Can also be used to discover the set of PE’s interested in a given customer multicast group (to enable S-PMSI creation)

- S-PMSI = Data MDT

- Used to advertise address of the originating PE and tunnel attribute information (which kind of tunnel)

BGP MVPN Address Family

- MP-BGP extensions to support mVPN address family

- Used for advertisement of AD routes

- Used for advertisement of C-mcast routes (*,G) and (S,G)

- Two new extended communities

- VRF route import – Used to import mcast routes, similar to RT for unicast routes

- Source AS – Used for inter-AS mVPN

- New BGP attributes

- PMSI Tunnel Attribute (PTA) – Contains information about advertised tunnel

- PPMP label attribute – Upstream generated label used by the downstream clients to send unicast messages towards the source

- If mVPN address family is not used the address family ipv4 mdt must be used

BGP Customer Multicast

- BGP Customer Multicast (C-mcast) signalling on overlay

- Tail-end driven updates are not a natural fit for BGP

- BGP is more suited for one-to-many not many-to-one

- PIM is still the PE-CE protocol

- Easy to use with SSM

- Complex to understand and troubleshoot for ASM

mLDP Inband Signaling

- Multicast flow information encoded in the mLDP FEC

- Each customer mcast flow creates state on the core routers

- Scaling is the same as with default MDT with every C-(S,G) on a Data MDT

- IPv4 and IPv6 multicast in global or VPN context

- Typical for SSM or PIM sparse mode sources

- IPTV walled garden deployment

- RFC 6826

The natural choice is to stick with PIM unless you need very high scalability. Here is a comparison of PIM and BGP.

BGP C-Signaling

- With C-PIM signaling on default MDT models, data needs to be monitored

- On default/data tree to detect duplicate forwarders over MDT and to trigger the assert process

- On default MDT to perform SPT switchover (from (*,G) to (S,G))

- On default MDT models with C-BGP signaling

- There is only one forwarder on MDT

- There are no asserts

- The BGP type 5 routes are used for SPT switchover on PEs

- Type 4 leaf AD route used to track type 3 S-PMSI (Data MDT) routes

- Needed when RR is deployed

- If the source PE sets the leaf-info-required flag on type 3 routes, the receiver PE responds with a type 4 route

Migration

If PIM is used in the core, this can be migrated to mLDP. PIM can also be migrated to BGP. This can be done per multicast source, per multicast group and per source ingress router. This means that migration can be done gradually so that not all core trees must be replaced at the same time.

It is also possible to have both mGRE and MPLS encapsulation in the network for different PE’s.

To summarize the different options for assigning flows to LSPs

- Static

- Mostly applicable to RSVP-TE

- PIM

- Well known, has been in use since mVPN introduction over GRE

- BGP A-D

- Useful where head-end assigns the flows to the LSP

- BGP C-mcast

- Alternative to PIM in mVPN context

- May be required in dual vendor networks

- mLDP inband signaling

- Method to stitch a PIM tree to an mLDP LSP without any additional signaling

Optimizing the MDT

There are some drawbacks with the normal operation of the MDT. The tree is signalled even if there is no customer traffic, leading to unnecessary state in the core. To overcome these limitations there is a model called the partitioned MDT running over mLDP with the following characteristics.

- Dynamic version of default MDT model

- MDT is only built when customer traffic needs to be transported across the core

- It addresses issues with the default MDT model

- Optimizes deployments where sources are located in a few sites

- Supports anycast sources

- Default MDT would use PIM asserts

- Reduces the number of PIM neighbors

- PIM neighborship is unidirectional – The egress PE sees ingress PEs as PIM neighbors

Conclusion

There are many different profiles supported, currently 27 profiles on Cisco equipment. Here are some guidelines to help you select a profile for NG-MVPN.

- Label Switched Multicast (LSM) provides unified unicast and multicast forwarding

- Choosing a profile depends on the application and scalability/feature requirements

- mLDP is the natural and safe choice for general purpose

- Inband signalling is for walled garden deployments

- Partitioned MDT is most suitable if there are few sources/few sites

- P2MP TE is used for bandwidth reservation and video distribution (few source sites)

- Default MDT model is for anyone (else)

- PIM is still used as the PE-CE protocol towards the customer

- PIM or BGP can be used as an overlay protocol unless inband signaling or static mapping is used

- BGP is the natural choice for high scalability deployments

- BGP may be the natural choice if already using it for Auto-Discovery

- The beauty of NG-MVPN is that profile can be selected per customer/VPN

- Even per source, per group or per next-hop can be done with Routing Policy Language (RPL)

This post was heavily inspired by, and is basically a summary of, the Cisco Live session BRKIPM-3017 mVPN Deployment Models by Ijsbrand Wijnands and Luc De Ghein. I recommend that you read it for more details and configuration of NG-MVPN.

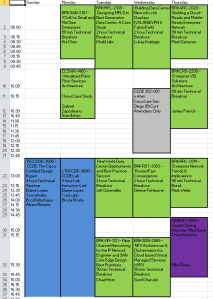

My CLUS 2015 Schedule for San Diego

With roughly two months to go before Cisco Live starts, here is my preliminary schedule for San Diego.

I have two CCDE sessions booked to help me prepare for the CCDE exam. I have the written exam scheduled on Wednesday and we’ll see how that goes.

I have a pretty strong focus on DC because I want to learn more in that area and that should also help me prepare for the CCDE.

I have the Routed Fast Convergence because it’s a good session and Denise Fishburne is an amazing instructor and person.

Are you going? Do you have any sessions in common? Please say hi if we meet in San Diego.