Archive

Detecting Network Failure

Introduction

In today’s networks, reliability is critical. Reliability needs to be high and

convergence needs to be fast. There are several ways of detecting network failure

but not all of them scale. This post takes a look at different methods of

detection and discusses when one or the other should be used.

Routing Convergence Components

There are mainly four components of routing convergence:

- Failure detection

- Failure propagation (flooding)

- Topology/Routing recalculation

- Update of the routing and forwarding table (RIB and FIB)

With modern networking equipment and CPUs it’s actually the first

one that takes the most time, not the flooding or recalculation of the topology.

Failure can be detected at different layers of the OSI model. It can be layer 1, 2

or 3. When designing the network it’s important to look at complexity and cost

vs the convergence gain. A more complex solution could increase the Mean Time

Between Failures (MTBF) but also increase the Mean Time To Repair (MTTR) leading

to a lower reliability in the end.
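
To make the MTBF/MTTR trade-off concrete, here is a minimal sketch using the common steady-state availability formula, MTBF / (MTBF + MTTR). The numbers are hypothetical and only chosen to show that a design that fails less often can still end up worse if it takes longer to repair.

```python
def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the fraction of time the service is up."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Hypothetical figures, not measurements from any real network.
simple_design = availability(mtbf_hours=10_000, mttr_hours=2)
complex_design = availability(mtbf_hours=12_000, mttr_hours=8)

print(f"simple design:  {simple_design:.5%}")
print(f"complex design: {complex_design:.5%}")
```

With these assumed values the more complex design has the higher MTBF but the lower availability, because the longer repair time outweighs the gain.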

Layer 1 Failure Detection – Ethernet

Ethernet has built-in detection of link failure. This works by sending

pulses across the link to test its integrity. This is dependent on

autonegotiation, so don’t hard code links unless you must! In the case of

running a P2P link over a CWDM/DWDM network make sure that link failure

detection is still operational or use higher layer methods for detecting

failure.

Carrier Delay

- Runs in software

- Filters link up and down events, notifies protocols

- Most IOS versions default to 2 seconds to suppress flapping

- Not recommended to set it to 0 on SVI

- Router feature

Debounce Timer

- Delays link down event only

- Runs in firmware

- 100 ms default in NX-OS

- 300 ms default on copper in IOS and 10 ms for fiber

- Recommended to keep it at default

- Switch feature

IP Event Dampening

If you modify the carrier delay and/or debounce timer, look at implementing IP

event dampening. Otherwise there is a risk of the interface flapping a lot

if the timers are set too low.

Layer 2 Failure Detection

Some layer 2 protocols, like Frame Relay and PPP, have their own keepalives. This

post only looks at Ethernet.

UDLD

- Detects one-way connections due to hardware failure

- Detects one-way connections due to soft failure

- Detects miswiring

- Runs on any single Ethernet link even inside a bundle

- Typically centralized implementation

UDLD is not a fast protocol. Detecting a failure can take more than 20 seconds so

it shouldn’t be used for fast convergence. There is a fast version of UDLD that supports

sub-second detection, but this still runs centralized so it does not scale well and should

only be used on a select few ports.

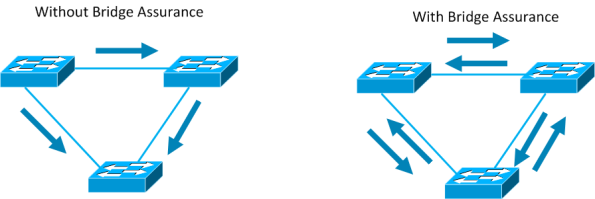

Spanning Tree Bridge Assurance

- Turns STP into a bidirectional protocol

- Ensures spanning tree fails “closed” rather than “open”

- If port type is “network” send BPDU regardless of state

- If network port stops receiving BPDU it’s put in BA-inconsistent state

Bridge Assurance (BA) can help protect against bridging loops where a port becomes

designated because it has stopped receiving BPDUs. This is similar to the function

of loop guard.

LACP

It’s not common knowledge that LACP has built-in mechanisms to detect failures.

This is why you should never hardcode EtherChannels between switches; always

use LACP. LACP is used to:

- Ensure configuration consistency across bundle members on both ends

- Ensure wiring consistency (bundle members between 2 chassis)

- Detect unidirectional links

- Provide a bundle member keepalive

LACP peers will negotiate the requested send rate through the use of PDUs.

If keepalives are not received a port will be suspended from the bundle.

LACP is not a fast protocol; default timers are usually 30 seconds for keepalive

and 90 seconds for dead. The timers can be tuned but this doesn’t scale well if you

have many links because it’s a control plane protocol. IOS XR has support for

sub-second timers for LACP.

Layer 3 Failure Detection

There are plenty of protocol timers available at layer 3. OSPF, EIGRP, ISIS,

HSRP and so on. Tuning these from their default values is common and many of

these protocols support sub-second timers, but because they are processed by the

RP/CPU they don’t scale well if you have many interfaces enabled. Tuning these

timers can work well in small and controlled environments though. These are

some reasons to not tune layer 3 timers too low:

- Each interface may have several protocols like PIM, HSRP, OSPF running

- Increased supervisor CPU utilization leading to false positives

- More complex configuration and bandwidth wasted

- Might not support ISSU/SSO
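
As a rough illustration of why tuned layer 3 timers don’t scale, the sketch below estimates how many hello packets per second the control plane has to generate. The interface count, the number of protocols and the single shared hello interval are all assumptions made for the example; real protocols have different defaults, and received hellos add to the load as well.

```python
def hellos_per_second(interfaces: int, protocols_per_interface: int,
                      hello_interval_ms: int) -> float:
    """Hello packets the CPU must send per second across all interfaces."""
    return interfaces * protocols_per_interface * 1000 / hello_interval_ms


# Hypothetical box: 200 interfaces, each running 3 protocols (e.g. PIM, HSRP, OSPF).
default_load = hellos_per_second(200, 3, hello_interval_ms=10_000)
tuned_load = hellos_per_second(200, 3, hello_interval_ms=50)

print(f"10 s hellos:  {default_load:.0f} packets/s")
print(f"50 ms hellos: {tuned_load:.0f} packets/s")
```

Under these assumptions the transmit load goes from 60 to 12,000 packets per second, all handled by the RP/CPU.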

BFD

Bidirectional Forwarding Detection (BFD) is a lightweight protocol designed to

detect liveness over links/bundles. Key points about BFD:

- Designed for sub-second failure detection

- Any interested client (OSPF, HSRP, BGP) registers with BFD and is notified when BFD detects loss

- All registered clients benefit from uniform failure detection

- Uses UDP port 3784 (control) and 3785 (echo)

Because any interested protocol can register with BFD there are fewer packets

going across the link, which means less wasted bandwidth. The packets

are also smaller in size, which reduces this even more.

Many platforms also support offloading BFD to line cards which means that the

CPU does not get increased load when BFD is enabled. It also supports ISSU/SSO.

BFD negotiates the transmit and receive interval. If we have a router R1

that wants to transmit at a 50 ms interval but R2 can only receive at 100 ms

then R1 has to transmit at a 100 ms interval.
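
The negotiation can be sketched in a few lines. This follows the asynchronous mode rules as I understand them from RFC 5880: a system transmits at the slower of what it wants to send and what the peer can receive, and the receiver’s detection time is the sender’s detect multiplier times that agreed interval. The function names and the multiplier of 3 are assumptions for the example.

```python
def negotiated_tx_interval_ms(local_desired_min_tx_ms: int,
                              remote_required_min_rx_ms: int) -> int:
    """Interval the local system actually transmits at."""
    return max(local_desired_min_tx_ms, remote_required_min_rx_ms)


def detection_time_ms(remote_detect_mult: int,
                      local_required_min_rx_ms: int,
                      remote_desired_min_tx_ms: int) -> int:
    """How long the local system waits without packets before declaring the session down."""
    return remote_detect_mult * max(local_required_min_rx_ms, remote_desired_min_tx_ms)


# R1 wants to send every 50 ms, R2 can only receive every 100 ms.
r1_tx = negotiated_tx_interval_ms(local_desired_min_tx_ms=50, remote_required_min_rx_ms=100)

# R2's detection time for packets from R1, assuming R1 uses a detect multiplier of 3.
r2_detect = detection_time_ms(remote_detect_mult=3,
                              local_required_min_rx_ms=100,
                              remote_desired_min_tx_ms=50)

print(r1_tx, r2_detect)
```

With these inputs R1 transmits every 100 ms and R2 declares the session down after 300 ms without packets.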

BFD can run in asynchronous mode or echo mode. In asynchronous mode the BFD

packets go to the control plane to detect liveness. This can also be combined

with echo mode which sends a packet with a source and destination IP of the

sending router itself. This way the packet is looped back at the other end

testing the data plane. When echo mode is enabled the control plane packets

are sent at a slower pace.

Link bundles

There can be challenges running BFD over link bundles. Due to CEF polarization,

control plane/data plane packets might always be sent over the same link. This

means that not all links in the bundle can be properly tested. There is

a per link BFD mode but it seems to have limited support so far.

Event Driven vs Polled

Generally, event-driven mechanisms are both faster and scale better than polled

mechanisms of detecting failure. Rely on event-driven mechanisms if you have the option

and only use polled mechanisms when necessary.

Conclusion

Detecting a network failure is a very important part of network convergence. It

is generally the step that takes the most time. Which protocols to use depends

on network design and the platforms used. Don’t enable all protocols on a link

without knowing what they actually do. Don’t tune timers too low unless you

know why you are tuning them. Use BFD if you can as it is faster and uses

fewer resources. For more information refer to BRKRST-2333.

Scaling PEs in MPLS VPN – Route Target Constraint (RTC)

Introduction

Any decent-sized service provider, or even an enterprise network running

MPLS VPN, will most likely be using Route Reflectors (RR). As described in

a previous post, an iBGP full mesh does not really scale. By default all

PEs will receive all routes reflected by the RR even if the PE does not

have a VRF configured with an import matching the route. To mitigate this

inefficient behavior Route Target Constraint (RTC) can be configured. This

is defined in RFC 4684.

Route Target Constraint

The way this feature works is that the PE will advertise to the RR which RTs

it intends to import. The RR will then implement an outbound filter only sending

routes matching those RTs to the PE. This is much more efficient than the default

behavior. Obviously the RR still needs to receive all the routes so no filtering

is done towards the RR. To enable this feature a new Subsequent Address Family Identifier (SAFI) is

used called rtfilter. To show this feature we will implement the following topology.

The scenario here is that PE1 is located in a large PoP where there are already plenty

of customers. It currently has 255 customers. PE2 is located in a new PoP and so far only

one customer is connected there. It’s unnecessary for the RR to send all routes to PE2

for all of PE1’s customers because it does not need them. To simulate the customers I wrote

a simple bash script to create the VRFs for me in PE1.

#!/bin/bash

# Print the IOS configuration for the test VRFs on PE1, one VRF per value of i.

for i in {0..255}

do

echo "ip vrf $i"

echo "rd 1:$i"

echo "route-target 1:$i"

echo "interface loopback$i"

echo "ip vrf forwarding $i"

echo "ip address 10.0.$i.1 255.255.255.0"

echo "router bgp 65000"

echo "address-family ipv4 vrf $i"

echo "network 10.0.$i.0 mask 255.255.255.0"

done

PE2 will not import these because the RT does not match any import statement in

the only VRF it currently has configured. If we debug BGP we can see lots of messages

like:

BGP(4): Incoming path from 4.4.4.4

BGP(4): 4.4.4.4 rcvd UPDATE w/ attr: nexthop 1.1.1.1, origin i, localpref 100, metric 0, originator 1.1.1.1, clusterlist 4.4.4.4, extended community RT:1:104

BGP(4): 4.4.4.4 rcvd 1:104:10.0.104.0/24, label 120 -- DENIED due to: extended community not supported;

In this case we have 255 routes but what if it was 1 million routes? That would be

a big waste of both processing power and bandwidth, not to mention that the RR would

have to format all the BGP updates. These are the benefits of enabling RTC:

- Eliminating waste of processing power on PE and RR and waste of bandwidth

- Less VPNv4 formatted Updates

- BGP convergence time is reduced

Currently the RR is advertising 257 prefixes to PE2.

RR#sh bgp vpnv4 uni all neighbors 3.3.3.3 advertised-routes | i Total

Total number of prefixes 257

Implementation

Implementing RTC is simple. It has to be supported on both the RR and the PE though.

Add the following commands under BGP:

RR:

RR(config)#router bgp 65000

RR(config-router)#address-family rtfilter unicast

RR(config-router-af)#nei 3.3.3.3 activate

RR(config-router-af)#nei 3.3.3.3 route-reflector-client

PE2:

PE2(config)#router bgp 65000

PE2(config-router)#address-family rtfilter unicast

PE2(config-router-af)#nei 4.4.4.4 activate

The BGP session will be torn down when doing this! Now to see how many routes the RR is

sending.

RR#sh bgp vpnv4 uni all neighbors 3.3.3.3 advertised-routes | i Total

Total number of prefixes 0

No prefixes! To see the RT filter in effect use this command:

RR#sh bgp rtfilter unicast all

BGP table version is 3, local router ID is 4.4.4.4

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale, m multipath, b backup-path, f RT-Filter,

x best-external, a additional-path, c RIB-compressed,

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

0:0:0:0 0.0.0.0 0 i

*>i 65000:2:1:256 3.3.3.3 0 100 32768 i

Now we add an import under the VRF in PE2 and one route should be sent.

PE2(config)#ip vrf 0

PE2(config-vrf)#route-target import 1:1

PE2#sh ip route vrf 0

Routing Table: 0

Codes: L - local, C - connected, S - static, R - RIP, M - mobile, B - BGP

D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2

E1 - OSPF external type 1, E2 - OSPF external type 2

i - IS-IS, su - IS-IS summary, L1 - IS-IS level-1, L2 - IS-IS level-2

ia - IS-IS inter area, * - candidate default, U - per-user static route

o - ODR, P - periodic downloaded static route, H - NHRP, l - LISP

+ - replicated route, % - next hop override

Gateway of last resort is not set

10.0.0.0/8 is variably subnetted, 3 subnets, 2 masks

B 10.0.1.0/24 [200/0] via 1.1.1.1, 00:00:16

C 10.1.1.0/24 is directly connected, Loopback1

L 10.1.1.1/32 is directly connected, Loopback1

RR#sh bgp vpnv4 uni all neighbors 3.3.3.3 advertised-routes | i Total

Total number of prefixes 1

RR#sh bgp rtfilter unicast all

BGP table version is 4, local router ID is 4.4.4.4

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale, m multipath, b backup-path, f RT-Filter,

x best-external, a additional-path, c RIB-compressed,

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

0:0:0:0 0.0.0.0 0 i

*>i 65000:2:1:1 3.3.3.3 0 100 32768 i

*>i 65000:2:1:256 3.3.3.3 0 100 32768 i

Works as expected. From the output we can see that the AS is 65000, the extended

community type is 2 and the RTs that PE2 has asked to receive are 1:1 and 1:256.
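
The behavior can be modelled with a small sketch: parse the rtfilter entries in the format shown in the output above, then apply them as an outbound filter to the routes PE1 generated with the bash script. This is only a toy model of the idea, not how the RR implements it; the class and function names are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RtMembership:
    origin_as: int
    community_type: int
    route_target: str


def parse_rtfilter_entry(entry: str) -> RtMembership:
    """Parse an entry such as '65000:2:1:1' (origin AS : type : route target)."""
    origin_as, community_type, route_target = entry.split(":", 2)
    return RtMembership(int(origin_as), int(community_type), route_target)


def outbound_filter(routes, memberships):
    """Keep only the prefixes whose RT the peer has signalled interest in."""
    wanted = {m.route_target for m in memberships}
    return [prefix for prefix, rt in routes if rt in wanted]


# PE1's routes as created by the script: VRF i has prefix 10.0.i.0/24 and RT 1:i.
pe1_routes = [(f"10.0.{i}.0/24", f"1:{i}") for i in range(256)]

# What PE2 advertised in the rtfilter address family.
pe2_memberships = [parse_rtfilter_entry(e) for e in ("65000:2:1:1", "65000:2:1:256")]

sent_to_pe2 = outbound_filter(pe1_routes, pe2_memberships)
print(sent_to_pe2)
```

Only 10.0.1.0/24 survives the filter, which matches the single prefix the RR advertises to PE2 once the import for 1:1 is added.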

Conclusion

Route Target Constraint is a powerful feature that will lessen the load on both your

Route Reflectors and PE devices in an MPLS VPN enabled network. It can also help

make BGP converge faster. Support is needed on both PE and RR and the BGP

session will be torn down when enabling it so it has to be done during a maintenance

window.

iBGP – Fully meshed vs Route Reflection

Intro

This post looks at the pros and cons of BGP Route Reflection compared to running

an iBGP full mesh.

Full mesh

Because routes learned from one iBGP peer are not advertised to other iBGP peers, there must be a full mesh

inside the BGP network. This leads to scalability issues. With N routers, each router

has (N-1) iBGP neighbors and there are (N*(N-1))/2 BGP sessions in total. For a medium

sized ISP network with 100 routers running BGP this would be 99 iBGP neighbors

and 4950 BGP sessions in total.
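
The arithmetic is easy to check with a few lines of Python. The function names are just for illustration.

```python
def ibgp_neighbors_per_router(n: int) -> int:
    """Every router peers with all the other routers."""
    return n - 1


def full_mesh_sessions(n: int) -> int:
    """Each pair of routers shares exactly one session."""
    return n * (n - 1) // 2


for routers in (4, 100, 300):
    print(routers, ibgp_neighbors_per_router(routers), full_mesh_sessions(routers))
```

Note how the number of sessions grows roughly with the square of the number of routers, while the neighbor count per router grows linearly.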

There are 4 routers in AS 2 which gives 3 iBGP neighbors and 6 iBGP sessions in total.

Benefits of a full mesh:

- Optimal Traffic Forwarding

- Path Diversity

- Convergence

- Robustness

Optimal Traffic Forwarding:

Because all BGP speaking routers are fully meshed they will receive iBGP updates

from all peers. If no attributes have been manipulated then the tiebreaker

will be the metric to the next-hop (IGP) so traffic will take the optimal path.

Path Diversity:

Due to the full mesh the BGP speaking router will have multiple paths to choose

from. If it was connected to a RR it would generally only have one path, the one

the RR decided was the best.

Convergence:

Because the BGP speaking router has multiple paths, it can start using one of the

alternate paths if the current best one should fail. Also, the BGP UPDATE messages are sent

directly between the iBGP peers instead of passing through an additional router (RR),

which would have to process them. With an RR the packets would also have to travel additional

distance unless the RR is located in the same PoP as the routers.

Robustness:

If one BGP speaking router fails then only the networks behind that router are

not reachable any longer. If a RR fails then all networks that were reachable via

clients to that RR would no longer be reachable.

Caveats of a full mesh:

- Lack of Scalability

- Management Overhead

- Duplication of Information

Lack of Scalability:

Having hundreds of BGP sessions on all routers would mean a lot of BGP processing.

The number of BGP Updates coming in would be massive.

This would put a great burden on the CPU/RP of the router. For really large networks

this could potentially be more than the router can handle. In a network with 300 routers

there would be 44850 iBGP sessions. The RIB-in size would be very large because of the

large number of peers.

Management Overhead:

Adding a new device to the network means reconfiguring all the existing devices.

Configurations would be very big considering all the lines needed to set up the

full mesh.

Duplication of Information:

For every external network there could potentially be multiple paths internally

leading to using lots of RIB/FIB space on the devices. It does not make much sense

to install all paths into RIB/FIB.

Benefits of Route Reflection:

- Scalability

- Reduced Operational Cost

- Reduced RIB-in Size

- Reduced Number of BGP Updates

- Incremental Deployability

Scalability:

The number of iBGP sessions needed is greatly reduced. A client only needs one session,

or preferably two to have route reflector redundancy. A route reflector design needs

(K*(K-1))/2 + C sessions in total, where K is the number of route reflectors and C is the

number of clients, each with a single session. The route reflectors still need to be in full mesh with each other.
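
A quick sketch of that formula, with one assumption added on top of it: a `sessions_per_client` parameter so the redundant case (each client peering with two RRs) can be compared as well. The 100-router split into 2 RRs and 98 clients is a hypothetical example.

```python
def rr_sessions(route_reflectors: int, clients: int, sessions_per_client: int = 1) -> int:
    """Total iBGP sessions: a full mesh between the RRs plus the client sessions."""
    rr_mesh = route_reflectors * (route_reflectors - 1) // 2
    return rr_mesh + clients * sessions_per_client


# 100 routers split into 2 route reflectors and 98 clients (hypothetical design).
single_homed = rr_sessions(route_reflectors=2, clients=98)
dual_homed = rr_sessions(route_reflectors=2, clients=98, sessions_per_client=2)

print(single_homed, dual_homed)
```

Even with every client dual-homed this is 197 sessions, compared with 4950 for a full mesh of the same 100 routers.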

Reduced Operational Cost:

With a full mesh, adding a new device requires reconfiguring all the existing

devices. This requires operator intervention which is an added cost. With route reflection,

when adding a new device only the new device and the RR it peers with need new configuration.

Reduced RIB-in Size:

RIB-in contains the unprocessed BGP information. After processing this information

the best paths are installed into the Loc-RIB. The RIB-in grows proportionally with

the number of neighbors that the router peers with. If there are n neighbors and p prefixes

then the router would have a RIB-in that is of size n * p. In a full mesh n is very high

but with route reflection n is only the number of RRs that the router peers with.
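
To get a feel for the difference, the sketch below applies the n * p estimate to a 100-router network. The prefix count is a made-up round number, and the estimate assumes that every neighbor advertises every prefix.

```python
def rib_in_entries(neighbors: int, prefixes: int) -> int:
    """Worst-case RIB-in size when every neighbor advertises every prefix."""
    return neighbors * prefixes


PREFIXES = 500_000  # hypothetical table size

full_mesh = rib_in_entries(neighbors=99, prefixes=PREFIXES)  # 100 routers, full mesh
with_rr = rib_in_entries(neighbors=2, prefixes=PREFIXES)     # client of two RRs

print(f"full mesh: {full_mesh:,} entries")
print(f"with RRs:  {with_rr:,} entries")
```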

Reduced Number of BGP Updates:

In a full mesh a router will receive N – 1 updates where N is the number of routers.

This is a large number of updates. With route reflection the number is small since it is

only the number of route reflectors the router peers with.

Incremental Deployability:

Route reflection does not require massive changes in the existing network like with

confederations. It can be deployed incrementally and routers can be migrated to the

RR topology gradually. Not all routers need to be moved at once.

Caveats of Route Reflection:

- Robustness

- Prolonged Routing Convergence

- Potential Loops

- Reduced Path Diversity

- Suboptimal Routes

Robustness:

With a full mesh if a single router fails that only impacts the networks behind

that router. If a route reflector fails it affects all the networks that were

behind all of the route reflector’s clients. To avoid single points of failure,

RRs are usually deployed in pairs.

Prolonged Routing Convergence:

In a full mesh every BGP update only travels a single hop. With route reflection

the number of hops is increased and if the route reflectors are set up in a

hierarchical topology the update could travel through several RRs. Every RR

will add some processing delay and propagation delay before the update reaches

the client.

Potential Loops:

In a topology where clients are connected to a single RR there should be no

data plane loops. When clients are connected to two RRs there is a risk

of a loop forming if the control plane topology does not match the physical

topology. Because of that it is important to try to match the two topologies.

Reduced Path Diversity:

In a full mesh if there are multiple paths to an external network then

all paths will be announced and the local router makes a decision which one

is the best. With route reflection the RR makes the decision which path is

the best and announces this path only. This leads to fewer paths being

announced which could lead to longer convergence delays.

There are drafts for announcing more than one best path which would help

with this issue. Some newer IOS releases support this feature.

Suboptimal routes:

The RR will select a best path based on its own local routing information.

This could lead to routers using suboptimal paths because there may be

a shorter path available from a router’s perspective but this is not the

path that the RR has chosen. Therefore it’s important to consider where

the RRs are placed.

Conclusion

This post took a look at the benefits and caveats of a fully meshed iBGP network

vs route reflection. Although scalability makes it almost impossible not to

go with route reflection, one should still consider the caveats of route reflection.

It’s important to consider the placement and the number of RRs in the topology.

This post is the first in a series of posts that will focus on CCDE topics.

BGP wedgies – Why isn’t my routing policy having effect?

Intro

Brian McGahan from INE introduced me to something interesting the other day.

BGP wedgie, what is that? I had never heard of it before although I’ve heard

of such things occurring. A BGP wedgie is when a BGP configuration can lead

to different end states depending on the order in which routes are sent. There is

actually an RFC for this – RFC 4264.

Peering relationships

To understand this RFC you need to have some knowledge of BGP and the different

kinds of peering relationships between service providers and customers.

Service providers are usually described as Tier 1 or Tier 2. A Tier 1 service provider

is one that does not need to buy transit. They have private peerings with other service

providers to reach all networks in the Default Free Zone (DFZ). This is the

theory although it’s difficult in the real world to see who is Tier 1 or not.

Tier 2 service providers don’t have private peerings to reach all the networks so they

must buy transit from one or more Tier 1 service providers. This is a paid

service.

Service providers have different preference for routes coming in. The most

preferred routes are those coming from customers. After that it is preferred

to send traffic over private peerings since in theory this should be cheaper than

transit. The least preferred is to send traffic towards your transit.

Why is my policy not working?

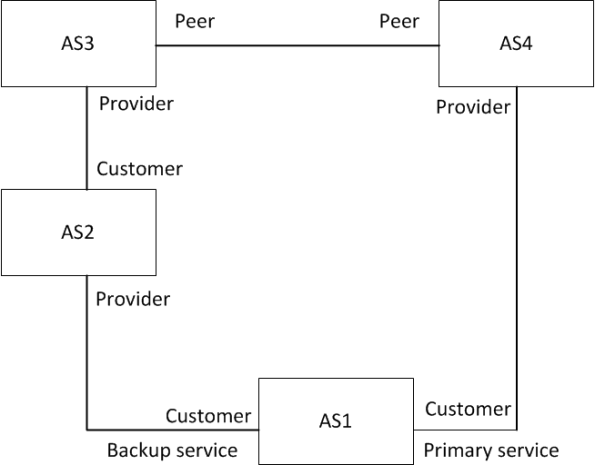

Assume that you are a customer buying capacity from two service providers.

You want to use one service provider as primary and one as secondary.

This is usually done by sending a community towards your secondary provider

which then sets local preference. Keep in mind that providers will still have

their best economic result in mind though. Take a look at the following diagram.

We will be configuring AS1. We want the network 1.1.1.0/24 to be reached primarily

via AS4 and secondarily via AS2. We will use communities to achieve this. We

set up the primary path first.

This is the configuration of AS1 so far:

router bgp 1

 no synchronization

 bgp log-neighbor-changes

 neighbor 12.12.12.2 remote-as 2

 neighbor 12.12.12.2 description backup

 neighbor 12.12.12.2 shutdown

 neighbor 12.12.12.2 send-community

 neighbor 12.12.12.2 route-map set-backup out

 neighbor 14.14.14.4 remote-as 4

 neighbor 14.14.14.4 description primary

 no auto-summary

!

ip bgp-community new-format

!

route-map set-backup permit 10

 set community 2:50

The backup will be turned up later.

Looking from AS2’s perspective we now have the correct path.

AS2#sh bgp ipv4 uni

BGP table version is 2, local router ID is 23.23.23.2

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

*> 1.1.1.0/24 23.23.23.3 0 3 4 1 i

AS2#traceroute 1.1.1.1

Type escape sequence to abort.

Tracing the route to 1.1.1.1

1 AS3 (23.23.23.3) 80 msec 36 msec 20 msec

2 AS4 (34.34.34.4) 64 msec 56 msec 48 msec

3 AS1 (14.14.14.1) 84 msec * 68 msec

Now the backup service is turned up.

AS1(config-router)#no nei 12.12.12.2 shut

AS1(config-router)#

%BGP-5-ADJCHANGE: neighbor 12.12.12.2 Up

AS2 still prefers the correct path due to local preference.

AS2#sh bgp ipv4 uni

BGP table version is 2, local router ID is 23.23.23.2

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

* 1.1.1.0/24 12.12.12.1 0 50 0 1 i

*> 23.23.23.3 0 3 4 1 i

AS2#traceroute 1.1.1.1

Type escape sequence to abort.

Tracing the route to 1.1.1.1

1 AS3 (23.23.23.3) 84 msec 44 msec 20 msec

2 AS4 (34.34.34.4) 56 msec 60 msec 44 msec

3 AS1 (14.14.14.1) 100 msec * 100 msec

AS3 and AS4 have the following route-map to increase local pref for customer

routes.

AS3#sh route-map

route-map customer, permit, sequence 10

Match clauses:

Set clauses:

local-preference 150

Policy routing matches: 0 packets, 0 bytes

Now what happens if there is a failure between AS1 and AS4?

AS2 now only has one path available.

AS2#sh bgp ipv4 uni

BGP table version is 3, local router ID is 23.23.23.2

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

*> 1.1.1.0/24 12.12.12.1 0 50 0 1 i

This is advertised to AS3 which sets local preference to 150.

AS3#sh bgp ipv4 uni

BGP table version is 4, local router ID is 34.34.34.3

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

*> 1.1.1.0/24 23.23.23.2 150 0 2 1 i

Now the primary circuit comes back. AS3 will prefer to go via AS2 because

that is a customer route.

AS3#sh bgp ipv4 uni

BGP table version is 4, local router ID is 34.34.34.3

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

* 1.1.1.0/24 34.34.34.4 0 4 1 i

*> 23.23.23.2 150 0 2 1 i

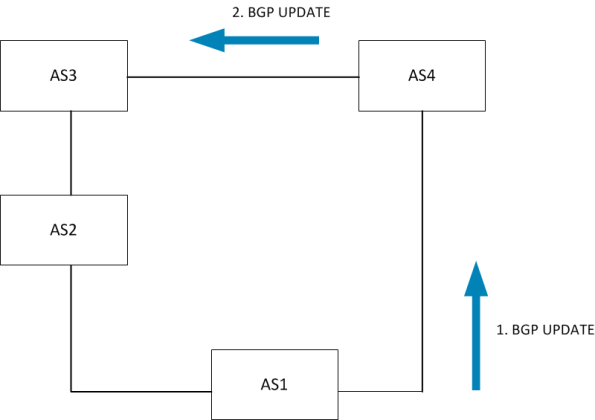

We now have a BGP wedgie. The same BGP configuration has generated two

different outcomes depending on the order in which the routes were announced.

The only way of breaking the wedgie is now to stop announcing the backup, let

the network converge and then bring up the backup again. AS2 now has the correct

path again.

AS2#sh bgp ipv4 uni

BGP table version is 5, local router ID is 23.23.23.2

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

* 1.1.1.0/24 12.12.12.1 0 50 0 1 i

*> 23.23.23.3 0 3 4 1 i

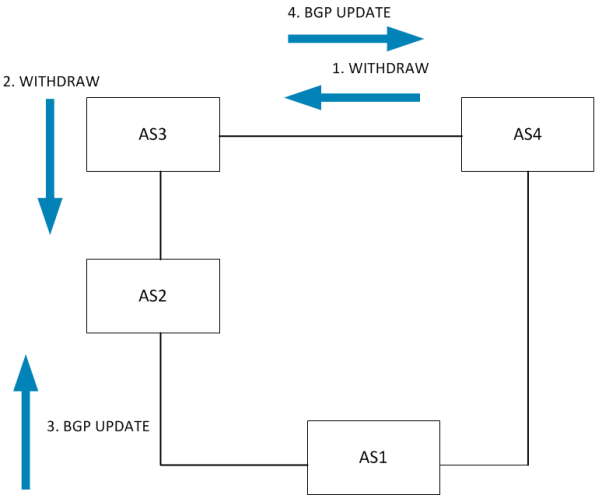

So to describe what is actually happening, take a look at this diagram.

The number describes in what order the UPDATE is sent. AS2 has two paths but

the one directly to AS1 has a local pref of 50 due to AS1 using it as a backup.

This means that AS2 does not send this path to AS3 so AS3 has to use the path

via AS4. This is the key. Now what happens when the circuit between AS1 and AS4

fails?

The key here is step 3 where AS2 sends its only current path to AS3. AS3 will then

set local preference to 150 because this is a customer route. Then the primary

circuit comes back.

AS1 announces the network to AS4. AS4 announces this to AS3. AS3 does NOT

advertise this to AS2 because it already has a best path via AS2 where

the local preference is 150. This means that the network can not converge

to the primary path until the backup path has been removed.
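
The whole sequence can be reproduced with a toy simulation. This is a simplified sketch, not real BGP: each AS is treated as a single router, best path is chosen on local preference and then AS-path length only, there are no export policies, and the ASes are re-evaluated in a fixed order until nothing changes. The local preference values are the ones used in the lab above, with 100 assumed as the default.

```python
DEFAULT_LOCAL_PREF = 100
# (receiving AS, sending AS) -> local preference applied on import
LOCAL_PREF = {
    (2, 1): 50,   # AS1 tags the backup announcement with community 2:50
    (3, 2): 150,  # AS2 is a customer of AS3
    (4, 1): 150,  # AS1 is a customer of AS4
}
ORIGIN = 1
CORE = [(2, 3), (3, 4)]
PRIMARY = (1, 4)
BACKUP = (1, 2)


def neighbors(asn, links):
    """ASes directly connected to asn over the links that are currently up."""
    return [b if a == asn else a for a, b in links if asn in (a, b)]


def converge(links, best):
    """Re-run best-path selection from the state `best` until it is stable.

    `best` maps an AS to the AS path it currently advertises (or None).
    """
    best = dict(best)
    changed = True
    while changed:
        changed = False
        for asn in (2, 3, 4):
            candidates = []
            for nbr in neighbors(asn, links):
                path = (ORIGIN,) if nbr == ORIGIN else best.get(nbr)
                if path is None or asn in path:
                    continue  # nothing advertised, or AS-path loop
                pref = LOCAL_PREF.get((asn, nbr), DEFAULT_LOCAL_PREF)
                candidates.append((pref, -len(path), (asn,) + path))
            new_best = max(candidates)[2] if candidates else None
            if new_best != best.get(asn):
                best[asn] = new_best
                changed = True
    return best


both_up = CORE + [PRIMARY, BACKUP]
primary_only = converge(CORE + [PRIMARY], {})
intended = converge(both_up, primary_only)           # backup turned up second
after_failure = converge(CORE + [BACKUP], intended)  # primary circuit fails
wedged = converge(both_up, after_failure)            # primary circuit returns
fixed = converge(both_up, converge(CORE + [PRIMARY], wedged))  # bounce the backup

print("intended:", intended[3], "wedged:", wedged[3], "fixed:", fixed[3])
```

The same set of links and policies ends in two different stable states: AS3 uses the path 3 4 1 when the backup comes up last, and 3 2 1 after the primary has failed and recovered. Withdrawing and re-announcing the backup brings it back to the intended state.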

Conclusion

BGP is a path vector protocol and sometimes the same configuration can

give different outcomes depending on the order in which updates are sent. Keep

this in mind when setting up BGP and try to learn as much as possible about

your service providers’ peerings.